AlexsDesignStudy

m (→SiteSearcher) |

m (Reverted edits by Ebybymic (Talk); changed back to last version by Warwick Irwin) |

||

| (32 intermediate revisions by 4 users not shown) | |||

| Line 4: | Line 4: | ||

The main problem is how to dynamically allocate object handlers. These handlers will preform actions on the content that is downloaded. | The main problem is how to dynamically allocate object handlers. These handlers will preform actions on the content that is downloaded. | ||

| + | |||

| + | [[Image:AlexInterface.png]] | ||

| + | |||

| + | It can be obtained here [http://wikisend.com/download/652258/SiteSearcher.zip| SiteSearcher on Wikisend] with the password UC | ||

| + | |||

| + | ==Goals== | ||

| + | *Instead of producing a large mess of highly coupled code (as is so common in security tools) to produce a tool that I can update to add new behaviour with minimal effort. | ||

| + | *Make sure I have a good grasp of UML modelling, something which previously has leaked out of my brain like a very thin custard through a well worn sock. | ||

| + | *To force some good practices into my head by implementing them. | ||

| + | |||

| + | ==C#== | ||

| + | There are some language specific constructs that must be remembered. | ||

| + | *readonly modifier - from the MSDN library "The readonly keyword is a modifier that you can use on fields. When a field declaration includes a readonly modifier, assignments to the fields introduced by the declaration can only occur as part of the declaration or in a constructor in the same class." - [http://msdn.microsoft.com/en-us/library/acdd6hb7%28VS.71%29.aspx] | ||

| + | *protected variables are implemented in a sane manner! [http://msdn.microsoft.com/en-us/library/ba0a1yw2.aspx]. This allows me to emulate a object based architecture such as those in ruby or smalltalk, without having to use ruby or smalltalk. | ||

==UML Overview== | ==UML Overview== | ||

[[Image:AlexUML1.png]] | [[Image:AlexUML1.png]] | ||

| − | This was a poor solution for example the job of creating an ObjectHandler took 30 lines of fiddly code. So the structure was updated. | + | This was a poor solution, for example the job of creating an ObjectHandler took 30 lines of fiddly code. So the structure was updated. |

| + | |||

| + | ---- | ||

[[Image:AlexUML2.png]] | [[Image:AlexUML2.png]] | ||

==Patterns== | ==Patterns== | ||

| − | + | In this design several common patterns are used: | |

*[[Singleton]] - This pattern is in the ConnectionPool class. The Connection pool handles a global tally of connections to insure that we do not in inadvertently DoS a website. The ObjectHandlerFactory is also a Singleton because the initialization only need be a once off affair. | *[[Singleton]] - This pattern is in the ConnectionPool class. The Connection pool handles a global tally of connections to insure that we do not in inadvertently DoS a website. The ObjectHandlerFactory is also a Singleton because the initialization only need be a once off affair. | ||

*[[Facade]] - The NetUtils class is a facade that simplifies the process of dealing with HTTP connections. | *[[Facade]] - The NetUtils class is a facade that simplifies the process of dealing with HTTP connections. | ||

*[[Strategy]] - The ObjectHandler and all it's subclasses act as a Strategy for dealing with files that are discovered. | *[[Strategy]] - The ObjectHandler and all it's subclasses act as a Strategy for dealing with files that are discovered. | ||

| + | *[[Object_pool|Object pool]] - The ConnectionPool is unsurprisingly an implementation of the object pool pattern. | ||

| + | *[[Factory_Method|Factory Method]] - The ObjectHandlerFactory is similar but not exactly a factory method. The purpose of this class is to encapsulate the behaviour needed to create ObjectHandlers. However the products in essence build themselves with supervision from the factory class instead of having concrete factories and concrete products. | ||

==Major Classes== | ==Major Classes== | ||

| Line 23: | Line 41: | ||

===NetUtilsResponse=== | ===NetUtilsResponse=== | ||

| − | This class is nothing if not a data class. It is created and returned from the NetUtils class. It contains the URL that was accessed, the local path it was saved to, a HTTP status string, and a bool that indicates success or failure. | + | This class is nothing if not a data class. It is created and returned from the NetUtils class. It contains the URL that was accessed, the local path it was saved to, a HTTP status string, and a bool that indicates success or failure. This class was created to avoid the [[Data_clumps_smell|data clumps smell]]. |

===BruteForm=== | ===BruteForm=== | ||

| Line 35: | Line 53: | ||

===ObjectHandler=== | ===ObjectHandler=== | ||

| − | ObjectHandlers as the name implies handle downloaded objects. The may process them in any way they wish. All object handlers must override the goodProcessor method and implement the startProcessing method. | + | ObjectHandlers as the name implies handle downloaded objects. The may process them in any way they wish. All object handlers must override the goodProcessor method and implement the startProcessing method. goodProcessor is a static method that returns true if the processor wishes to handle the data object it was passed. This class is abstract to conform to the [[No_concrete_base_classes|no concrete base classes]] maxim and the [[Stable_abstractions_principle|stable abstractions principle]]. |

| + | |||

| + | If we are to use the idea of design by contract then the contract for an ObjectHandler is very simple. An object handler will process a file in some manner. When this processing is over the file will remain in the same position in the file system and be unlocked. The goodProcessor method will return true if the handler is sure it is able to process the file otherwise it will return false. | ||

====PageRebuilder==== | ====PageRebuilder==== | ||

| − | The page rebuilder uses the HtmlAgilityPack framework to parse out the sources of images referenced by the page in question. These images are then saved using NetUtils::SaveFile. The HTML structure is then updated to load these images from the local system. | + | :The page rebuilder uses the HtmlAgilityPack framework to parse out the sources of images referenced by the page in question. These images are then saved using NetUtils::SaveFile. The HTML structure is then updated to load these images from the local system. |

====ImageHandler==== | ====ImageHandler==== | ||

| − | The image handler is a proof of concept class to show that the ObjectHandler system is extensible. This class takes an image and produces a thumbnail 300pixels wide that is placed side by side with its source. | + | :The image handler is a proof of concept class to show that the ObjectHandler system is extensible. This class takes an image and produces a thumbnail 300pixels wide that is placed side by side with its source. |

===ObjectHandlerFactory=== | ===ObjectHandlerFactory=== | ||

| Line 47: | Line 67: | ||

==Criticisms== | ==Criticisms== | ||

| − | *The is no clear model view separation. The classes MainForm and BrtueForm have components that make up the graphical user interface the forms also hold part of the model. This is poor practice because the Model can not exist without the view. In order to decouple these sections would mean creating two more classes (main and bruteforcer) and making the forms listen to them. This however is somewhat overcomplicated due to threading issues. For this reason the simple but less extensible option was chosen. | + | *The is no clear [[Presentation_separation_idiom|model view separation]]. The classes MainForm and BrtueForm have components that make up the graphical user interface the forms also hold part of the model. This is poor practice because the Model can not exist without the view. In order to decouple these sections would mean creating two more classes (main and bruteforcer) and making the forms listen to them. This however is somewhat overcomplicated due to threading issues. For this reason the simple but less extensible option was chosen. |

| + | |||

| + | *While for the most part the design follows the [[Encapsulate_Field|encapsulate field]] principle. The NetHandlerResponse class completely exposes all of its members. These however are protected using the C# readonly keyword. This means that members can only be set in the object constructor thus we are not [[Don't_expose_mutable_attributes|exposing our mutable attributes]]. We do however lose the ability to deny access to the data. | ||

| + | |||

| + | *The BruteForm class seems to have a [[Large_class_smell|large class smell]]. This class could possibly be split to have all string parsing methods (regular expression handling) in a desperate utility class. Preforming this split however goes against Reil's [[Keep_related_data_and_behavior_in_one_place|group related data and behaviour]] idea. However the [[Separation_of_concerns|separation of concerns]] maxim supports a split. | ||

| + | |||

| + | *There is duplication of behaviour, both the PageRebuilder and the BruteForm download items then request handlers to be created for these items. Maybe this behaviour should be encapsulated inside the NetUtils class. This introduces the possibility of infinite recursion attempting to mirror the entire internet. | ||

| + | |||

| + | *The contents of NetUtilResponses are broken up and passed to methods in ObjectHandler factory. This feels like a bad idea. Two maxims support my feelings here, the [[Reduce_the_number_of_arguments|reduce the number of arguments]] maxim and the [[Encapsulation_boundary|encapsulation boundary]] maxim. Basically by splitting up the attributes of the class into primitives I have to pass more arguments and secondly I am breaking the Objects encapsulation boundary. | ||

| + | |||

| + | |||

| + | ==A New Design== | ||

| + | |||

| + | ===Round 1=== | ||

| + | To address these problems a slightly updated design was produced. | ||

| + | |||

| + | ====UML==== | ||

| + | [[Image:AlexUML3.png]] | ||

| + | |||

| + | ====Improvements==== | ||

| + | *PageRebuilders no longer contain other ObjectHandlers. The only class to request ObjectHandlers is NetUtils. This is a slightly cleaner approach. | ||

| + | *The ObjectHandlerFactory::getHandler and ObjectHandler::goodProcessor methods now take a full NetUtilResponse object and can make more intelligent decisions. We also avoid breaking the encapsulation. | ||

| + | *NetUtilResponse now contains the content type reported in the HTTP header. As these are only created inside NetUtils little effort was needed in this refactoring. | ||

| + | *To deal with the possibility of infinite recursion ObjectHandlers must now override the canRecurse method stating if they can cause recursion. ObjectHandlerFactory now allows it's clients to specify whether or not to ignore ObjectHandlers which can recurse. This is an extension to the ObjectHandler contract. | ||

| + | |||

| + | ====Problems==== | ||

| + | *NetUtilResponses are being passed all over the place making the structure look very "busy". Most classes exhibit some coupling with NetUtilResponse. This may not be a large issue as it is unlikely to change. | ||

| + | |||

| + | |||

| + | ===Round 2=== | ||

| + | |||

| + | ====UML==== | ||

| + | [[Image:AlexUML4.png]] | ||

| + | |||

| + | ====Improvements==== | ||

| + | *The UML now reflects that PageRebuilders rely on a external package called the HTML agility pack, unfortunately NClass (my UML tool of choice) does not visualise inter-package relationships so the package has been represented as a class. | ||

| + | *CssHandlers now exist. These are similar to page handlers in that they find all the images in a CSS stylesheet and save them into the local file system using NetUtils methods. The CssHandler should not be capable of recursion as it specifies to NetUtils to only use recursion free subhandlers. | ||

| + | *The PageRebuilder::pageText attribute was dropped as holding a whole file in a string is nasty. This could be called a [[Primitive_obsession_smell|primitive obsession smell]] as HTML page is a form of tree not a flat file and should be represented as such. | ||

| + | |||

| + | ====Criticisms==== | ||

| + | *While unlikely it is possible that a CSS stylesheet could contain a url('') link to another CSS stylesheet which in turn referred back. This would cause a infinite recursion of CssHandler creation. CssHandler therefore should be updated to state that it is capable of recursion. Without this precaution webmasters could intentionally crash SiteSearcher. | ||

| + | *References to worker threads are not kept. This means it is impossible to terminate or pause these threads once they have begun operating. | ||

| + | |||

| + | ==Future Additions== | ||

| + | It would be nice if the state of a search could be saved and then resumed at a later time as these searches can take a lot of time and generate a lot of traffic. The solution would be to use a [[Visitor]] pattern. This visitor would find all instances of classes from this namespace using Reflection. These classes would then be visited and their contents written to an XML file. As different parts of searches happen across many different threads all running threads would first have to be stopped. Finding these threads would be difficult. This is a powerful argument as to use a second [[Object_pool|object pool]] to contain all the worker threads. If a class was dedicated to handling the thread pool then its responsibilities could include pausing all threads and also handling rebuilding and resuming them upon a search being loaded. | ||

| − | + | ==Conclusions== | |

| + | The design of this program has proved to be fairly extensible during its development. Two factors could be at play. Possibly the problem domain is fairly simple. Or hopefully because the design is good. In my opinion the design finds a good position somewhere in between the extremities expressed by most design maxims. Most of the design was motivated by the concept of [[Keep_it_simple|keep it simple]]. Additions were made when the design showed shortcomings. These additions avoided becoming [[Shotgun_surgery_smell|shotgun surgery]] as the level of coupling between most sections of the program has been kept minimal. The design follows the [[Law of Demeter]] as no classes communicate via other a third party. | ||

| − | + | There are no particularly [[Long_method_smell|long methods]] but there are some [[Large_class_smell|large classes]] notably BruteForm. This should definitely be refactored into a model section and a view section. Having the view listen to the brute forcer would be a simple solution but would not [[Don't_burn_your_base_class|burn the base class]]. While C# does not support multiple inheritance it provides a mechanism called events to implement the [[Observer|observer]] pattern. | |

Latest revision as of 03:08, 25 November 2010

Contents |

SiteSearcher

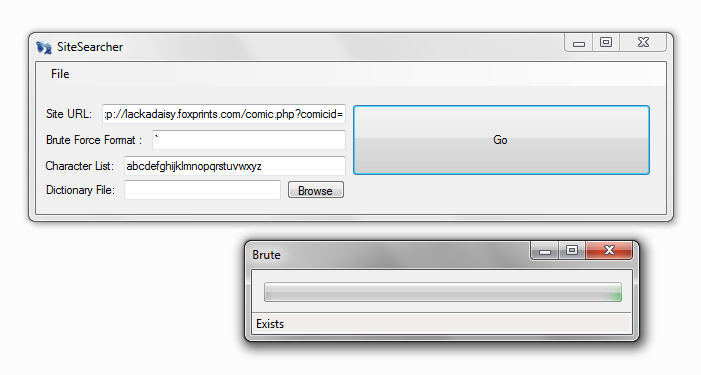

My design study is on a C# application built for the 400 level secure software course. The application scans websites in a brute force manner trying to find common URL components. When a page or object is found it is saved. If the object is an HTML file then it is scanned and dependencies are downloaded onto the local file system. The HTML page is then transformed to link to these local entities.

The main problem is how to dynamically allocate object handlers. These handlers will preform actions on the content that is downloaded.

It can be obtained here SiteSearcher on Wikisend with the password UC

Goals

- Instead of producing a large mess of highly coupled code (as is so common in security tools) to produce a tool that I can update to add new behaviour with minimal effort.

- Make sure I have a good grasp of UML modelling, something which previously has leaked out of my brain like a very thin custard through a well worn sock.

- To force some good practices into my head by implementing them.

C#

There are some language specific constructs that must be remembered.

- readonly modifier - from the MSDN library "The readonly keyword is a modifier that you can use on fields. When a field declaration includes a readonly modifier, assignments to the fields introduced by the declaration can only occur as part of the declaration or in a constructor in the same class." - [1]

- protected variables are implemented in a sane manner! [2]. This allows me to emulate a object based architecture such as those in ruby or smalltalk, without having to use ruby or smalltalk.

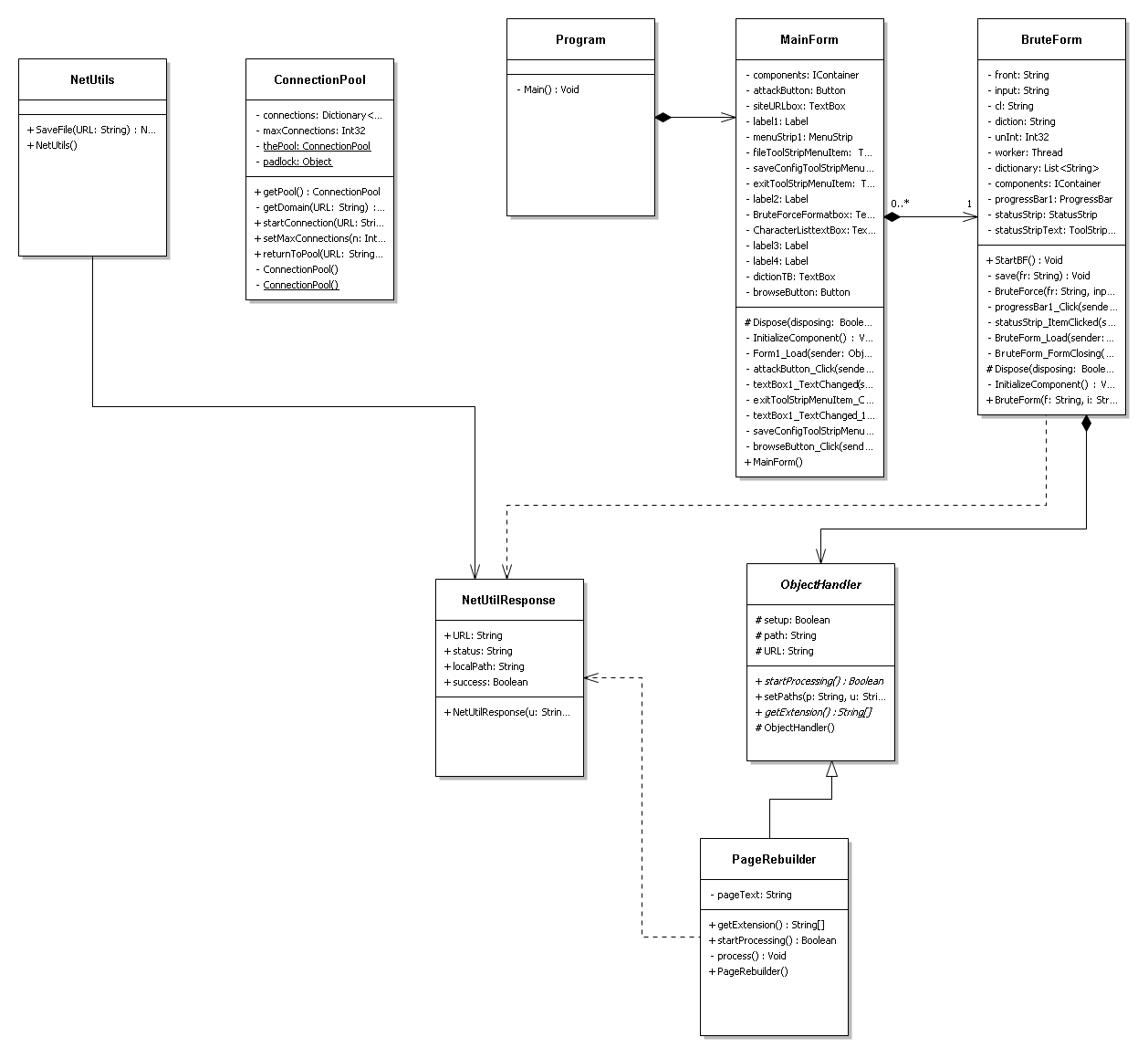

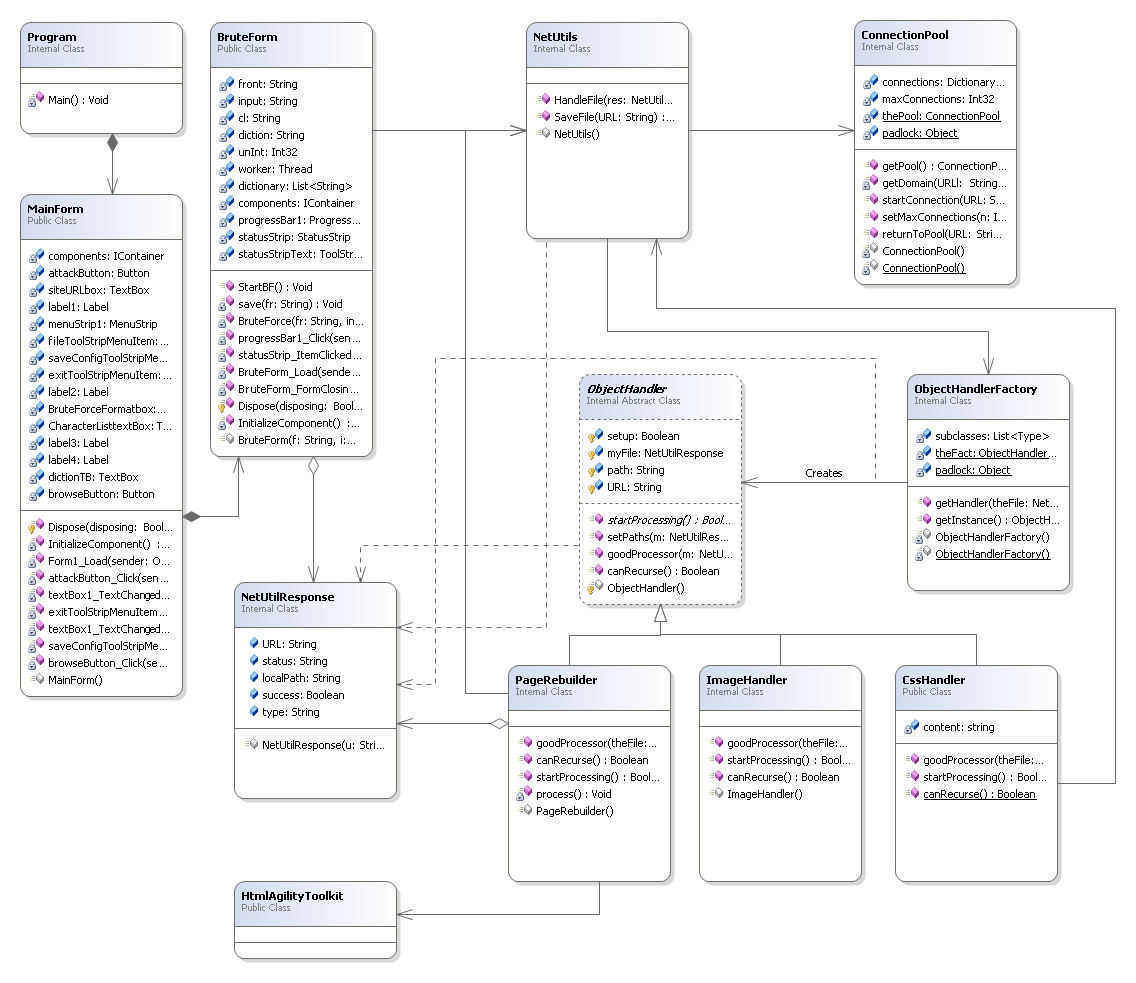

UML Overview

This was a poor solution, for example the job of creating an ObjectHandler took 30 lines of fiddly code. So the structure was updated.

Patterns

In this design several common patterns are used:

- Singleton - This pattern is in the ConnectionPool class. The Connection pool handles a global tally of connections to insure that we do not in inadvertently DoS a website. The ObjectHandlerFactory is also a Singleton because the initialization only need be a once off affair.

- Facade - The NetUtils class is a facade that simplifies the process of dealing with HTTP connections.

- Strategy - The ObjectHandler and all it's subclasses act as a Strategy for dealing with files that are discovered.

- Object pool - The ConnectionPool is unsurprisingly an implementation of the object pool pattern.

- Factory Method - The ObjectHandlerFactory is similar but not exactly a factory method. The purpose of this class is to encapsulate the behaviour needed to create ObjectHandlers. However the products in essence build themselves with supervision from the factory class instead of having concrete factories and concrete products.

Major Classes

NetUtils

This class contains static methods that are common across several classes. These methods are a façade abstracting the System.IO and System.Net libraries to an interface that is specific to HTTP get operations.

NetUtilsResponse

This class is nothing if not a data class. It is created and returned from the NetUtils class. It contains the URL that was accessed, the local path it was saved to, a HTTP status string, and a bool that indicates success or failure. This class was created to avoid the data clumps smell.

BruteForm

The brute form contains all the code involved in the parsing of attack strings. The NetUtils class is used to retrieve objects once the URL is determined. Additional processing is preformed by ObjectHandlers which are created dynamically based on the type of file that has been discovered.

Program

This class is a hangover from the default C# template program.

ConnectionPool

The connection pool is a singleton class that maintains the a list of all active connections. The class simply holds a HashMap of strings. When a URL is requested from the ConnectionPool it first trims the URL down to the target domain. This string is then tested against HashMap. If the map does not contain the string it is entered. If it does then the value is checked against the ConnectionPool's maxConnetions member. The method returns true if a connection is allowed and false if it is not.

ObjectHandler

ObjectHandlers as the name implies handle downloaded objects. The may process them in any way they wish. All object handlers must override the goodProcessor method and implement the startProcessing method. goodProcessor is a static method that returns true if the processor wishes to handle the data object it was passed. This class is abstract to conform to the no concrete base classes maxim and the stable abstractions principle.

If we are to use the idea of design by contract then the contract for an ObjectHandler is very simple. An object handler will process a file in some manner. When this processing is over the file will remain in the same position in the file system and be unlocked. The goodProcessor method will return true if the handler is sure it is able to process the file otherwise it will return false.

PageRebuilder

- The page rebuilder uses the HtmlAgilityPack framework to parse out the sources of images referenced by the page in question. These images are then saved using NetUtils::SaveFile. The HTML structure is then updated to load these images from the local system.

ImageHandler

- The image handler is a proof of concept class to show that the ObjectHandler system is extensible. This class takes an image and produces a thumbnail 300pixels wide that is placed side by side with its source.

ObjectHandlerFactory

This class is a singleton class. The object handler factory dynamically creates a ObjectHandler that is best suited to the content it is passed. This is accomplished by using Reflection to find all subclasses of ObjectHandler. Each of these subclasses is then passed the local address and source URL of the file that needs to be processed. This is accomplished with the ObjectHandler::goodProcessor method. If a subclass of ObjectHandler believes it can Handle the content it will return true. The first class to return true is then instantiated and returned to the caller who requested the creation of a handler. Thus the ObjectHandlerFactory completely handles all the reflection tasks required to dynamically and correctly build and initialise ObjectHandlers.

Criticisms

- The is no clear model view separation. The classes MainForm and BrtueForm have components that make up the graphical user interface the forms also hold part of the model. This is poor practice because the Model can not exist without the view. In order to decouple these sections would mean creating two more classes (main and bruteforcer) and making the forms listen to them. This however is somewhat overcomplicated due to threading issues. For this reason the simple but less extensible option was chosen.

- While for the most part the design follows the encapsulate field principle. The NetHandlerResponse class completely exposes all of its members. These however are protected using the C# readonly keyword. This means that members can only be set in the object constructor thus we are not exposing our mutable attributes. We do however lose the ability to deny access to the data.

- The BruteForm class seems to have a large class smell. This class could possibly be split to have all string parsing methods (regular expression handling) in a desperate utility class. Preforming this split however goes against Reil's group related data and behaviour idea. However the separation of concerns maxim supports a split.

- There is duplication of behaviour, both the PageRebuilder and the BruteForm download items then request handlers to be created for these items. Maybe this behaviour should be encapsulated inside the NetUtils class. This introduces the possibility of infinite recursion attempting to mirror the entire internet.

- The contents of NetUtilResponses are broken up and passed to methods in ObjectHandler factory. This feels like a bad idea. Two maxims support my feelings here, the reduce the number of arguments maxim and the encapsulation boundary maxim. Basically by splitting up the attributes of the class into primitives I have to pass more arguments and secondly I am breaking the Objects encapsulation boundary.

A New Design

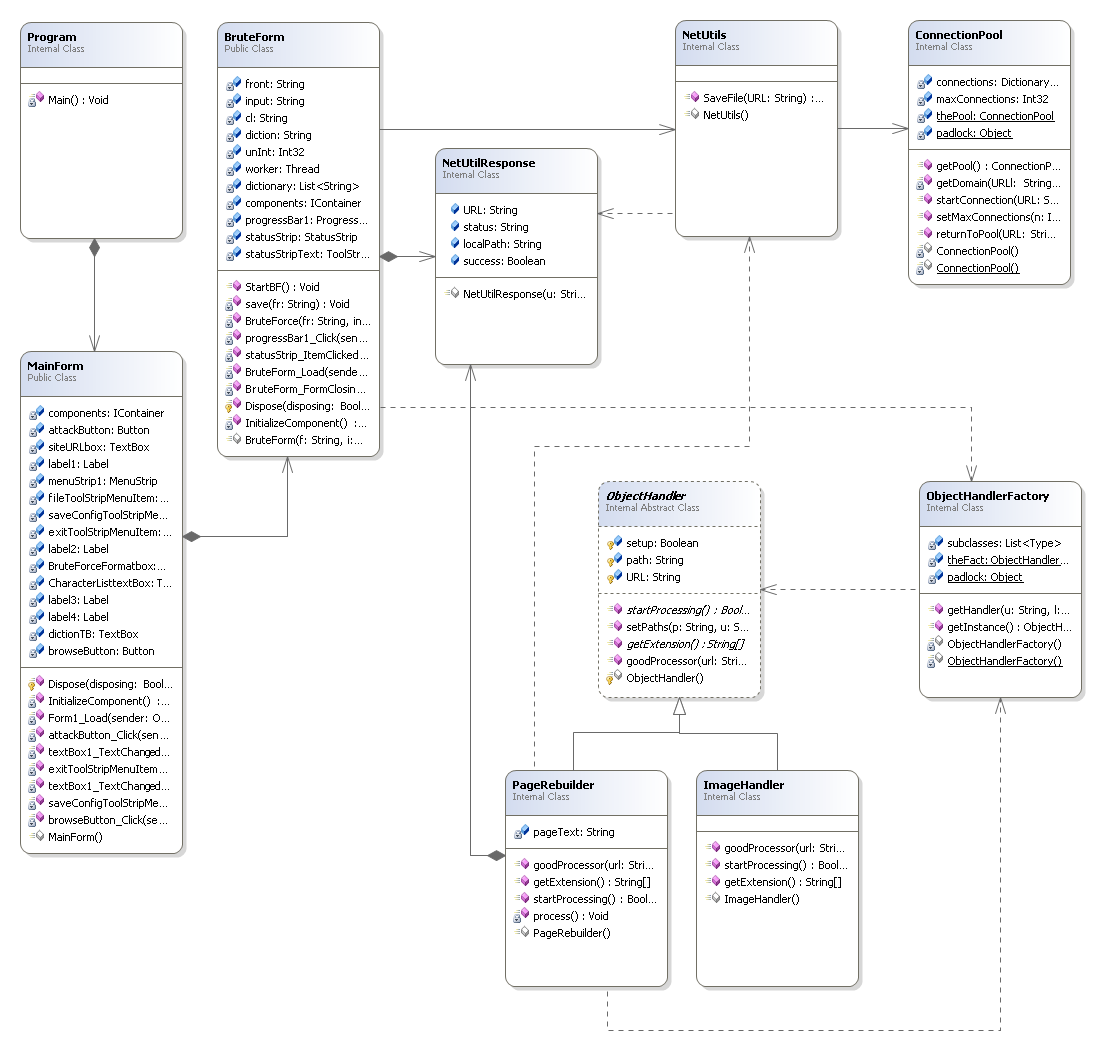

Round 1

To address these problems a slightly updated design was produced.

UML

Improvements

- PageRebuilders no longer contain other ObjectHandlers. The only class to request ObjectHandlers is NetUtils. This is a slightly cleaner approach.

- The ObjectHandlerFactory::getHandler and ObjectHandler::goodProcessor methods now take a full NetUtilResponse object and can make more intelligent decisions. We also avoid breaking the encapsulation.

- NetUtilResponse now contains the content type reported in the HTTP header. As these are only created inside NetUtils little effort was needed in this refactoring.

- To deal with the possibility of infinite recursion ObjectHandlers must now override the canRecurse method stating if they can cause recursion. ObjectHandlerFactory now allows it's clients to specify whether or not to ignore ObjectHandlers which can recurse. This is an extension to the ObjectHandler contract.

Problems

- NetUtilResponses are being passed all over the place making the structure look very "busy". Most classes exhibit some coupling with NetUtilResponse. This may not be a large issue as it is unlikely to change.

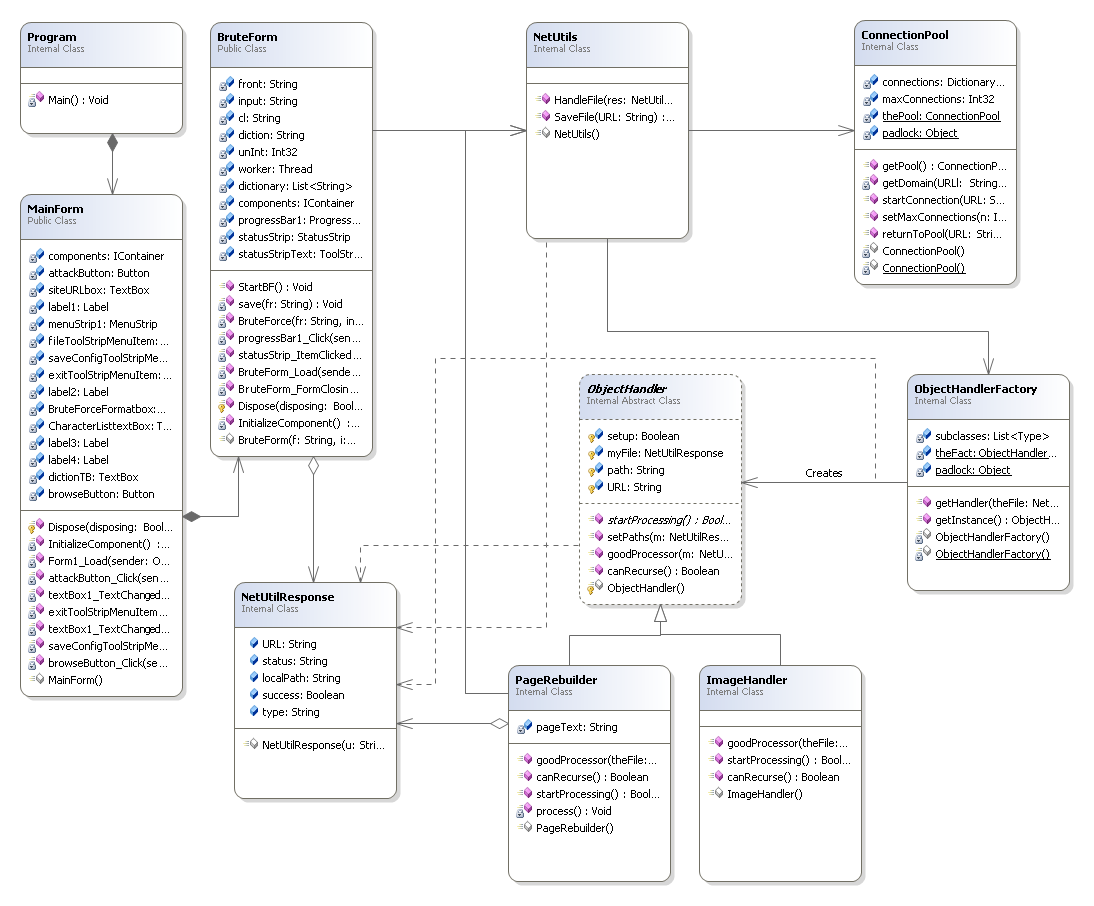

Round 2

UML

Improvements

- The UML now reflects that PageRebuilders rely on a external package called the HTML agility pack, unfortunately NClass (my UML tool of choice) does not visualise inter-package relationships so the package has been represented as a class.

- CssHandlers now exist. These are similar to page handlers in that they find all the images in a CSS stylesheet and save them into the local file system using NetUtils methods. The CssHandler should not be capable of recursion as it specifies to NetUtils to only use recursion free subhandlers.

- The PageRebuilder::pageText attribute was dropped as holding a whole file in a string is nasty. This could be called a primitive obsession smell as HTML page is a form of tree not a flat file and should be represented as such.

Criticisms

- While unlikely it is possible that a CSS stylesheet could contain a url() link to another CSS stylesheet which in turn referred back. This would cause a infinite recursion of CssHandler creation. CssHandler therefore should be updated to state that it is capable of recursion. Without this precaution webmasters could intentionally crash SiteSearcher.

- References to worker threads are not kept. This means it is impossible to terminate or pause these threads once they have begun operating.

Future Additions

It would be nice if the state of a search could be saved and then resumed at a later time as these searches can take a lot of time and generate a lot of traffic. The solution would be to use a Visitor pattern. This visitor would find all instances of classes from this namespace using Reflection. These classes would then be visited and their contents written to an XML file. As different parts of searches happen across many different threads all running threads would first have to be stopped. Finding these threads would be difficult. This is a powerful argument as to use a second object pool to contain all the worker threads. If a class was dedicated to handling the thread pool then its responsibilities could include pausing all threads and also handling rebuilding and resuming them upon a search being loaded.

Conclusions

The design of this program has proved to be fairly extensible during its development. Two factors could be at play. Possibly the problem domain is fairly simple. Or hopefully because the design is good. In my opinion the design finds a good position somewhere in between the extremities expressed by most design maxims. Most of the design was motivated by the concept of keep it simple. Additions were made when the design showed shortcomings. These additions avoided becoming shotgun surgery as the level of coupling between most sections of the program has been kept minimal. The design follows the Law of Demeter as no classes communicate via other a third party.

There are no particularly long methods but there are some large classes notably BruteForm. This should definitely be refactored into a model section and a view section. Having the view listen to the brute forcer would be a simple solution but would not burn the base class. While C# does not support multiple inheritance it provides a mechanism called events to implement the observer pattern.