LukasKorsikaDesignStudy

| Line 1: | Line 1: | ||

== The Problem == | == The Problem == | ||

| − | The project I | + | The project I am designing in this study is an application to help me manage my files. I tend to have a number of copies of the same file scattered throughout my various computers and hard drives. I'm sure some of you also have this problem. The reasons for why this occurs include: |

| − | * Some partitions are only accessible under Linux | + | * Some partitions/disks are only accessible under Linux, so I copy files over to Windows-accessible partitions. |

* I often copy videos to my laptop to watch away from my desk. | * I often copy videos to my laptop to watch away from my desk. | ||

| + | * As above, it's useful to have music on both my laptop and my desktop. | ||

| + | |||

| + | While there are clever solutions to this at the filesystem level and the like, these are far too complicated and unstable for me to use on an everyday basis. This program performs a much simpler task: It will go through my filesystem, and tell me which files are the same. I can then use this information to decide how to deal with the situation. | ||

| + | |||

| + | The input to the program is a list of files to be compared for duplication, and the output is a set of groups of identical files, with each group separated by a new line. Originally I wanted to pass in a list of paths, but this method allows me much more flexibility. Given that this program is intended to be used primarily under Linux, I can use a tool like ''find''(1) to generate the list of files I am interested in. This gives me the power to limit my comparisons to, for example, files with the .avi extension. Adding support for such advanced operation to this program would duplicate existing functionality, and violate the unix philosophy of Do One Thing, And Do It Well. (in OO terms, the [[Single responsibility principle]]). | ||

| + | |||

| + | It is based on an existing program I wrote in C many years ago (well, about three). The goal of this exercise is to iteratively improve the design to a level at which I am happy with it, and then implement it in C# (running under Mono). | ||

== Requirements == | == Requirements == | ||

| − | * Must take a list of files as input, and output | + | ''A basic description of requirements the solution must fulfill''. |

| − | * Must support a variety of approaches for determining equality -- | + | |

| − | * Must use reasonable amounts of memory | + | * Must take a list of files as input, and output groups of identical files |

| − | * Should be file-system agnostic ( | + | * Must support a variety of approaches for determining equality -- for instance, file size, hashes, modification time, and the raw file contents. |

| − | * Should be extensible | + | * Must use reasonable amounts of memory, I/O bandwidth, and time. |

| + | * Should be file-system agnostic (that is, it shouldn't be limited to one particular file system) | ||

| + | * Should be extensible, to ease implementation of possible future features. (the original does not fulfill this requirement). | ||

== Initial Design == | == Initial Design == | ||

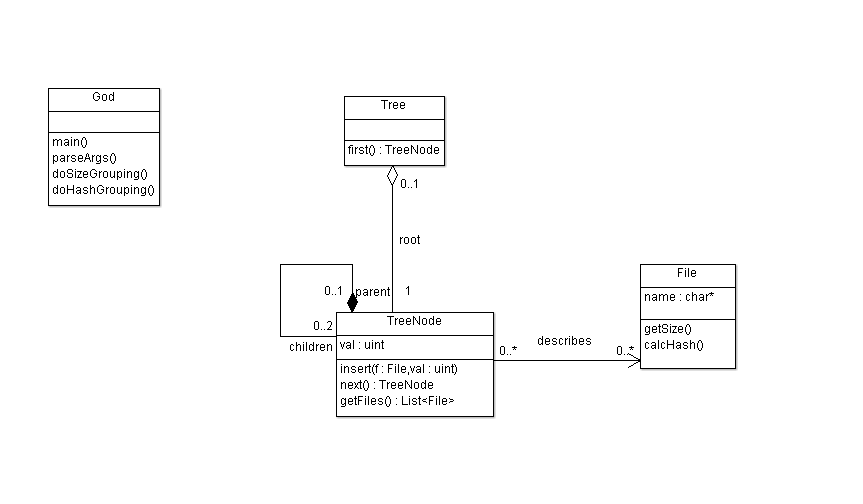

| − | ''(converted to Java from C, so some liberties have been taken with classes, but this is essentially its original form)'' | + | ''(converted to Java from the original C source, so some liberties have been taken with converting this into classes, but this is essentially its original form)'' |

[[image:Lko15-OldUML.png]] | [[image:Lko15-OldUML.png]] | ||

Revision as of 09:08, 29 September 2010

Contents |

The Problem

The project I am designing in this study is an application to help me manage my files. I tend to have a number of copies of the same file scattered throughout my various computers and hard drives. I'm sure some of you also have this problem. The reasons for why this occurs include:

- Some partitions/disks are only accessible under Linux, so I copy files over to Windows-accessible partitions.

- I often copy videos to my laptop to watch away from my desk.

- As above, it's useful to have music on both my laptop and my desktop.

While there are clever solutions to this at the filesystem level and the like, these are far too complicated and unstable for me to use on an everyday basis. This program performs a much simpler task: It will go through my filesystem, and tell me which files are the same. I can then use this information to decide how to deal with the situation.

The input to the program is a list of files to be compared for duplication, and the output is a set of groups of identical files, with each group separated by a new line. Originally I wanted to pass in a list of paths, but this method allows me much more flexibility. Given that this program is intended to be used primarily under Linux, I can use a tool like find(1) to generate the list of files I am interested in. This gives me the power to limit my comparisons to, for example, files with the .avi extension. Adding support for such advanced operation to this program would duplicate existing functionality, and violate the unix philosophy of Do One Thing, And Do It Well. (in OO terms, the Single responsibility principle).

It is based on an existing program I wrote in C many years ago (well, about three). The goal of this exercise is to iteratively improve the design to a level at which I am happy with it, and then implement it in C# (running under Mono).

Requirements

A basic description of requirements the solution must fulfill.

- Must take a list of files as input, and output groups of identical files

- Must support a variety of approaches for determining equality -- for instance, file size, hashes, modification time, and the raw file contents.

- Must use reasonable amounts of memory, I/O bandwidth, and time.

- Should be file-system agnostic (that is, it shouldn't be limited to one particular file system)

- Should be extensible, to ease implementation of possible future features. (the original does not fulfill this requirement).

Initial Design

(converted to Java from the original C source, so some liberties have been taken with converting this into classes, but this is essentially its original form)

Design Description

As this was a program in C, there is essentially a God Class, with a few helper classes and methods thereupon. The helper classes are:

- File -- This represents a file on the file system, and has methods to find its size, and its SHA-1 hash.

- Tree -- This is a simple class representing a Tree. A tree is composed of a set of TreeNode, and stores a reference to the root. It also has a prune method, which uses a recursive algorithm to remove all TreeNodes with one or fewer (<= 1) files in it.

- TreeNode -- A tree node represents a node in a binary tree, stores its key (which may be size or hash depending on the tree), and a list of all files which have that value. TreeNode has a number of recursive methods to iterate over the tree, get the list of files at that node, and insert a new file with a key recursively.

Most of the functionality occurs in functions which are placed in the God class to represent their being global functions. This god class handles the parsing of arguments, the reading of the file list from stdin, building groups of identical files, and outputting the result.

Grouping is performed by first building a grouping tree using the size as the key (it calls Tree.insert(myFile.getsize(), myFile);), then pruning the tree. It then iterates over the resulting tree (consisting of pairs of sizes and file lists) to find files that might be identical (as they have the same size).

For each of these groups it then constructs a new tree using the hash values as keys, prunes the tree, and outputs the files grouped by hash. Thus, the files are grouped by size and hash.

I realise that this is terrible design. This design study will iteratively improve the design, as well as creating a Java implementation of the program.

Criticisms

- Uses a God class -- many sub-issues to do with this.

- Separate functionality into appropriate classes.

- TreeNode deals with both maintaining collections of files, as well as implementing a binary tree. This violates the Single responsibility principle.

- Remove TreeNode, and instead make a Tree class that uses a MultiMap (which we probably have to implement. *sigh*, Java) to store the files.

- The prune method should be in TreeNode, to Keep related data and behavior in one place.

- This may have to stay this way, as rolling our own MultiMap with pruning support violates Single responsibility principle, and extending a MultiMap implementation would be Inheritance for implementation. Besides, given that a Tree is ComposedOf its MultiMap, this doesn't seem too bad.

- The God class shouldn't have to ask the File for its size/hash. It should instead tell the Tree to insert it using whatever hashing method that tree uses Tell, don't ask.

- A Tree could have a Classifier object which can be used for finding a given File's sorting key, allowing one to simply .Insert(File) into the tree. (In this case, Classifier is an interface, which would be implemented by various concrete classifiers such as SizeClassifier, HashClassifier, etc)

- The tree should know what it's grouping by rather than that information being implied by the variable storing the tree( eg Tree sizeTree) Keep related data and behavior in one place.

- (See above)

- The File class shouldn't calculate hashes Single responsibility principle

- Create a FileHasher, which can be instantiated by the HashClassifier. FileHasher knows about File, but File doesn't know about FileHasher Dependency inversion principle.

- It should be possible to add new key types to group by without modifying existing classes. Open closed principle Beware type switches

- Create a new concrete Classifier

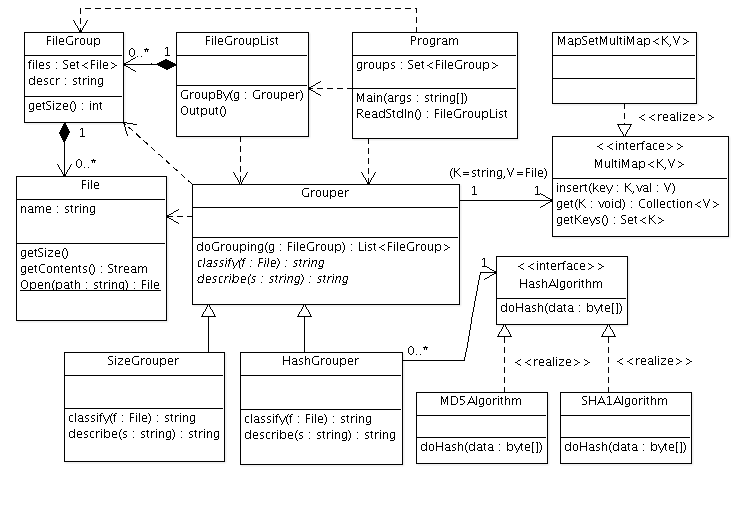

Revision One

The first modified version of the design, taking into account the above criticisms and suggestions.

This new design has moved most of the functionality out of the God class.

- The Program class is responsible for parsing the command line arguments and standard input. It also maintains a list of file groups, and outputs them at the end.

- The FileGroup class maintains a list of files which are supposed to be identical, as well as a textual description of the properties which lead us to suppose they are identical. In the current version of the program, this means they store a string of the format "Size: xKB" or "Size: xKB, Hash: ab2234989797b...".

- The File class is the same as above, except that the hash method has been moved to the MD5 class, as the File class should have a single responsibility.

- The actual data storage component of a Tree has been separated out into a generic, reusable MultiMap, and Tree has been renamed to Grouper, as this is a more accurate description.

- Grouper is an abstract class which implements the grouping logic. It has an abstract classify method which is overwritten by subclasses. Any Files which have the same return value for classify() are grouped together. Describe can be called on the return value of classify() to generate a human-readable representation of the classification (such as "Size: 103KB")

- Program begins by initialising groups member to the list of files read on stdin. Then each call to groupBy(Grouper) iterates over each group, and further subdivides it according to the given Grouper.