Terrain Renderer Design

| Line 4: | Line 4: | ||

Here is the initial design of the texturing side of the Terrain Renderer. The main piece of code contains one of each kind of Texture. The rendering can then be switched between each texture at runtime. Here's an overview of what each Texture does. | Here is the initial design of the texturing side of the Terrain Renderer. The main piece of code contains one of each kind of Texture. The rendering can then be switched between each texture at runtime. Here's an overview of what each Texture does. | ||

| − | * ProceduralTexture - A texture made up of multiple images. When created, the images are arranged and blended based on the terrain heightmap (higher regions have snow, lower regions have grass etc). This texture is applied 1-1 to the terrain. | + | * '''ProceduralTexture''' - A texture made up of multiple images. When created, the images are arranged and blended based on the terrain heightmap (higher regions have snow, lower regions have grass etc). This texture is applied 1-1 to the terrain. |

| − | + | * '''DetailMap''' - A DetailMap augments the ProceduralTexture method by using hardware Multitexturing to blend some small details to the terrain. The details are repeated a set amount across the terrain. | |

| − | * DetailMap - A DetailMap augments the ProceduralTexture method by using hardware Multitexturing to blend some small details to the terrain. The details are repeated a set amount across the terrain. | + | * '''TriGridTexture''' - Mainly for debugging, a TriGridTexture is created mathematically and applied to each polygon of the terrain individually. This way the polygon structure and number can be seen easily. |

| − | + | * '''BasicTexture''' - An almost empty class which implements the simplest kind of texture. One that is loaded from a file. However, this particular Texture doesn't know how to draw itself. It is only used by ShaderSplat. | |

| − | * TriGridTexture - Mainly for debugging, a TriGridTexture is created mathematically and applied to each polygon of the terrain individually. This way the polygon structure and number can be seen easily. | + | * '''ShaderSplat''' - ShaderSplat performs texturing and lighting using Shaders. It doesn't actually contain any texture information, instead it passes some BasicTextures through to the Shaders to process. |

| − | + | ||

| − | * BasicTexture - An almost empty class which implements the simplest kind of texture. One that is loaded from a file. However, this particular Texture doesn't know how to draw itself. It is only used by ShaderSplat. | + | |

| − | + | ||

| − | * ShaderSplat - ShaderSplat performs texturing and lighting using Shaders. It doesn't actually contain any texture information, instead it passes some BasicTextures through to the Shaders to process. | + | |

In addition to this there is the shader code which consists of two files, a vertex shader and a fragment shader which are compiled and linked at runtime. | In addition to this there is the shader code which consists of two files, a vertex shader and a fragment shader which are compiled and linked at runtime. | ||

| Line 20: | Line 16: | ||

At this stage, Texture seems to have several responsibilities and is therefore a violation of the [[Single responsibility principle]]. It is responsible for the OpenGL representation of a texture in video memory as well as how textures are applied to the terrain surface. This may not seem all that terrible, but it limits the capabilities of some of the methods. For example, in the case of the DetailMap, it can only contain a ProceduralTexture but there is no particularly good reason for this to be. If we allow it to contain an abstract Texture, this would allow it to contain a ShaderSplat Texture. Since both these Textures use the graphics hardware directly, it is extremely unlikely that these would cooperate. | At this stage, Texture seems to have several responsibilities and is therefore a violation of the [[Single responsibility principle]]. It is responsible for the OpenGL representation of a texture in video memory as well as how textures are applied to the terrain surface. This may not seem all that terrible, but it limits the capabilities of some of the methods. For example, in the case of the DetailMap, it can only contain a ProceduralTexture but there is no particularly good reason for this to be. If we allow it to contain an abstract Texture, this would allow it to contain a ShaderSplat Texture. Since both these Textures use the graphics hardware directly, it is extremely unlikely that these would cooperate. | ||

| − | ShaderSplat also seems to be | + | ShaderSplat also seems to be exhibit the [[Large class smell]]. In particular it currently keeps track of the location of each uniform variable in the shader code as seperate variables. It also contains multiple other textures (not seperate Texture classes) to do its job, such as a normal map (which is technically lighting data, but is stored in a texture format and can still be displayed like any other texture). It also has a fairly large interface (which could possibly be a [[Fat interfaces|Fat interface]]), since all the interactive pieces of the shader are passed through this object (such as whether certain features are enabled and parameters like the height levels to assign different textures to). |

| Line 26: | Line 22: | ||

[[Image:texture2.png|thumb]] | [[Image:texture2.png|thumb]] | ||

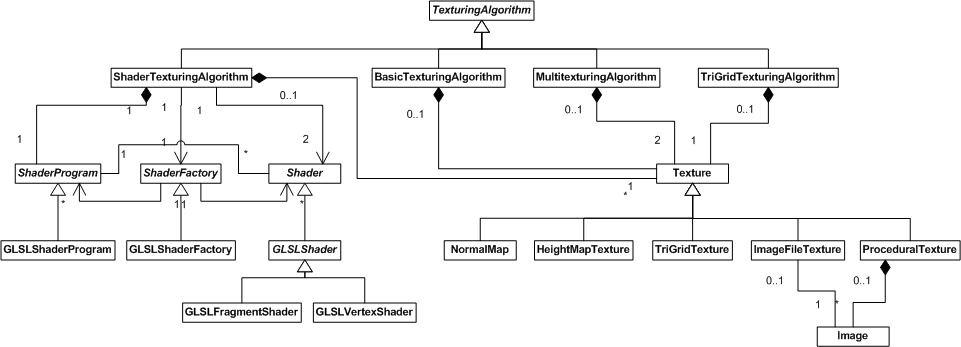

| − | To seperate the two responsibilities of Texture, I created another hierarchy, called TexturingAlgorithm. As the name suggests, it was inspired by the GoF [[Strategy]] design pattern. Although not visible in the diagram, a number of TexturingAlgorithms are contained within the terrain rendering code. These algorithms can be switched at runtime (however, they need to be told this explicitly using the use() and clear() methods to setup and cleanup respectively). In doing this I have | + | To seperate the two responsibilities of Texture, I created another hierarchy, called TexturingAlgorithm. As the name suggests, it was inspired by the GoF [[Strategy]] design pattern as well as the [[State]] pattern. This is because although it represents a particular way of texturing, it also defines the OpenGL state for that method. The state is a combination of which Textures are assigned and whether shaders are enabled. useCoordsForPoint() is the main operation it is responsible for, where texture coordinates are generated for the particular vertex. Although not visible in the diagram, a number of TexturingAlgorithms are contained within the terrain rendering code. These algorithms can be switched at runtime (however, they need to be told this explicitly using the use() and clear() methods to setup and cleanup respectively, since it would be far too costly to do this for every vertex of every frame). In doing this I have essentially created classes defined by their behaviour, which is basically the same as using a verb for the class name (I suppose Texture would be the name if used as a verb, which would be confusing). |

| − | One interesting thing to note is that we now have a distinct difference between a ProceduralTexture, which uses Images to create a Texture at load-time and a ShaderTexturingAlgorithm which uses Textures and combines them in a similar way at runtime. | + | One interesting thing to note is that we now have a distinct difference between a ProceduralTexture, which uses Images to create a Texture at load-time and a ShaderTexturingAlgorithm which uses Textures and combines them in a similar way at runtime. The main differences are that ShaderTexturingAlgorithm can change paramaters of this combination in realtime, but requires fairly modern graphics hardware to do so. |

| − | + | Here is a description of each class and what they are responsible for: | |

| − | + | * '''Texture''' - Represents an OpenGL texture. Holds a reference to it, knows how to bind and unbind itself to/from OpenGL. Also knows the length and width of the rendered terrain, which is necessary for some of the subclasses. | |

| + | * '''ImageFileTexture''' - Loads an image file into an OpenGL texture. | ||

| + | * '''ProceduralTexture''' - Loads multiple image files and combines them into one OpenGL texture based on the particular terrain. | ||

| + | * '''TriGridTexture''' - Creates an OpenGL texture with particular properties from geometric equations. | ||

| − | + | * '''TexturingAlgorithm''' - Controls the current texture bindings and how texture coordinates are generated. | |

| + | * '''BasicTexturingAlgorithm''' - Applies a texture to the terrain directly. | ||

| + | * '''MultitexturingAlgorithm''' - Applies a texture to the terrain directly and uses hardware multitexturing with another texture which is repeated a number of times over the terrain. | ||

| + | * '''TriGridTexturingAlgorithm''' - Applies an entire texture once per polygon. | ||

| + | * '''ShaderTexturingAlgorithm''' - Interfaces with OpenGL Shader Language (GLSL) shaders to texture and light the terrain using a number of textures. | ||

| − | == | + | == Extracting Texture Classes == |

[[Image:texture3.png|thumb]] | [[Image:texture3.png|thumb]] | ||

| − | + | Because ShaderTexturingAlgorithm contained a couple of Textures that it used, I thought it was a good idea to separate these. These Textures were the NormalMap and the HeightMapTexture. The NormalMap contains the normal vector directions at every point on the terrain and is used for lighting calculations in the Shader code. The HeightMapTexture (which is named so to distinguish it from the HeightMap class elsewhere in the code, which does, believe it or not, describe a different idea) is a monochrome texture which stores the height of the terrain at each point. This will be more accurate than the geometry itself which is an approximation of the heightmap. | |

| + | |||

| + | == Seperating Basic Shader Responsibilities From ShaderTexturingAlgorithm == | ||

| + | |||

| + | Shaders obviously embody a separate idea to the algorithm that uses them, so it makes sense to extract any shader interface code into at least another class. | ||

| + | |||

| + | The structure and use of shaders are as follows: | ||

| + | |||

| + | There are two main types of shaders, vertex and fragment shaders. These are pieces of code that can be loaded from files, but in my case they are always loaded from files. These are individually compiled, then attached to a shader program, where they are linked. To access uniform variables contained within the shader, the location of the variables must be known. The shader program can locate a variable given its name. It can also load a value into a variable given its location. The shader program can be set as the current shader program in execution. To return to the standard fixed function pipeline (no shaders), a null shader program needs to be used. | ||

| + | |||

| + | I decided to reflect this structure in classes as follows: | ||

| + | |||

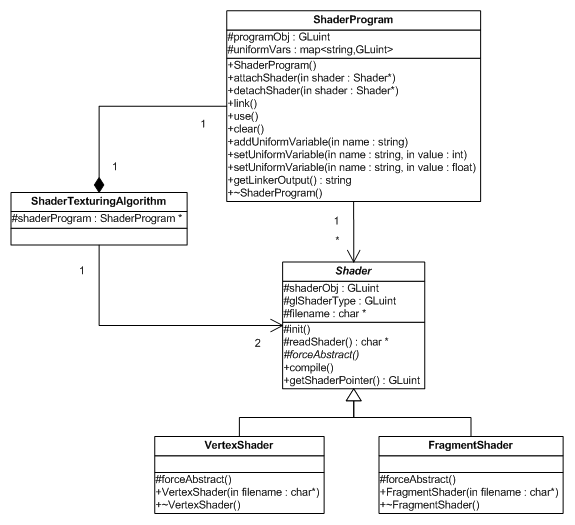

| + | [[Image:texture6.png]] | ||

| + | |||

| + | The ShaderProgram class wraps the GLSL shader program. It contains a map to store variable names and their locations. A shader wraps the GLSL shader. It is subclassed into the VertexShader and the FragmentShader. Shaders can be attached to a ShaderProgram after being compiled ((DbC: Precondition)). The ShaderProgram can then be linked. After linking the program can be used and cleared. | ||

| + | |||

| + | I decided to reflect the underlying model in the interface to these clases. That is, a shader needs to be explicitly compiled by the client after creation. These are then attached, one by one, to the shader program. The program then needs to be explicitly linked by the client. I could have compiled the Shader automatically directly after loading from the file (which is done in the constructor). I could have also given the ShaderProgram a list of Shaders to link automatically. The reason I have done this is that there may be situations I am unaware of where direct control like this is necessary. Also in the case of the shader program, there is no less complexity in creating a list to be passed to the constructor than in adding each Shader manually. | ||

| + | |||

| + | Design by Contract pre and post conditions?? | ||

| + | |||

| + | == Abstracting From the OpenGL Shader Language (GLSL) == | ||

| + | |||

| + | |||

== Final Design == | == Final Design == | ||

[[Image:texture4.png|thumb]] | [[Image:texture4.png|thumb]] | ||

| + | [[Image:texture5.png]] | ||

In this update, I abstract some of the low level aspects of shaders to their own classes. First we have the Shader class with its two subclasses VertexShader and FragmentShader. Unfortunately because there is no way to force a class to be abstract in C++, I had to make the forceAbstract() abstract method. Not sure if this is the best way to go about it, but I wanted to make sure that a generic Shader could not be constructed. The reason this cropped up is because there is very little difference between the two types of shader. The only difference is that when the shader is constructed in OpenGL, a constant has to be passed which tells OpenGL if it is a vertex shader or a fragment shader, but I thought it was worth subclassing like this so that the client (ShaderTexturingAlgorithm) doesn't need to know about this constant. | In this update, I abstract some of the low level aspects of shaders to their own classes. First we have the Shader class with its two subclasses VertexShader and FragmentShader. Unfortunately because there is no way to force a class to be abstract in C++, I had to make the forceAbstract() abstract method. Not sure if this is the best way to go about it, but I wanted to make sure that a generic Shader could not be constructed. The reason this cropped up is because there is very little difference between the two types of shader. The only difference is that when the shader is constructed in OpenGL, a constant has to be passed which tells OpenGL if it is a vertex shader or a fragment shader, but I thought it was worth subclassing like this so that the client (ShaderTexturingAlgorithm) doesn't need to know about this constant. | ||

| Line 92: | Line 118: | ||

One thing I wasn't sure about was how closely I should match the underlying API. I've ended up matching it fairly closely to how I used it. So a vertex shader needs compiling after being created, and a shaderProgram has each Shader attached manually, then finally needs to be linked. This could take a list of shaders or something instead, but I'm not sure if that would be any better. | One thing I wasn't sure about was how closely I should match the underlying API. I've ended up matching it fairly closely to how I used it. So a vertex shader needs compiling after being created, and a shaderProgram has each Shader attached manually, then finally needs to be linked. This could take a list of shaders or something instead, but I'm not sure if that would be any better. | ||

| − | |||

| − | |||

| − | |||

| − | |||

Revision as of 02:40, 30 September 2008


Initial Design

Here is the initial design of the texturing side of the Terrain Renderer. The main piece of code contains one of each kind of Texture. The rendering can then be switched between each texture at runtime. Here's an overview of what each Texture does.

- ProceduralTexture - A texture made up of multiple images. When created, the images are arranged and blended based on the terrain heightmap (higher regions have snow, lower regions have grass etc). This texture is applied 1-1 to the terrain.

- DetailMap - A DetailMap augments the ProceduralTexture method by using hardware Multitexturing to blend some small details to the terrain. The details are repeated a set amount across the terrain.

- TriGridTexture - Mainly for debugging, a TriGridTexture is created mathematically and applied to each polygon of the terrain individually. This way the polygon structure and number can be seen easily.

- BasicTexture - An almost empty class which implements the simplest kind of texture: one that is loaded from a file. However, this particular Texture doesn't know how to draw itself. It is only used by ShaderSplat.

- ShaderSplat - ShaderSplat performs texturing and lighting using Shaders. It doesn't actually contain any texture information; instead it passes some BasicTextures through to the Shaders to process.

In addition to this there is the shader code which consists of two files, a vertex shader and a fragment shader which are compiled and linked at runtime.
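The height-based blending described for ProceduralTexture can be sketched roughly as below. This is only an illustration under assumptions: the three layers (grass, rock, snow), the band centres and the function name blendWeights are all hypothetical and not taken from the renderer, which may blend quite differently.

```cpp
#include <algorithm>
#include <array>
#include <cmath>
#include <cstddef>

// Hypothetical layer order: 0 = grass, 1 = rock, 2 = snow.
// The height is assumed to be normalised to the range [0, 1].
std::array<float, 3> blendWeights(float height) {
    // Assumed band centres; a real terrain would tune these values.
    const std::array<float, 3> centre = {0.0f, 0.5f, 1.0f};
    const float halfWidth = 0.5f;  // distance at which a layer fades out completely

    height = std::max(0.0f, std::min(1.0f, height));

    std::array<float, 3> weights{};
    float total = 0.0f;
    for (std::size_t i = 0; i < weights.size(); ++i) {
        // Linear falloff: weight 1 at the band centre, 0 at halfWidth away from it.
        float distance = std::fabs(height - centre[i]);
        weights[i] = std::max(0.0f, 1.0f - distance / halfWidth);
        total += weights[i];
    }
    // Normalise so that the layer contributions sum to one.
    for (float& w : weights) {
        w /= total;
    }
    return weights;
}
```

Each texel of the combined texture would then be the weighted sum of the corresponding texels of the source images, computed once at load time.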

Critique

At this stage, Texture seems to have several responsibilities and is therefore a violation of the Single responsibility principle. It is responsible for the OpenGL representation of a texture in video memory as well as how textures are applied to the terrain surface. This may not seem all that terrible, but it limits the capabilities of some of the methods. For example, the DetailMap can only contain a ProceduralTexture, but there is no particularly good reason for this restriction. However, if we allowed it to contain any abstract Texture, it could also contain a ShaderSplat Texture, and since both of these Textures use the graphics hardware directly, it is extremely unlikely that they would cooperate.

ShaderSplat also seems to exhibit the Large class smell. In particular, it currently keeps track of the location of each uniform variable in the shader code as separate variables. It also contains multiple other textures (not separate Texture classes) to do its job, such as a normal map (which is technically lighting data, but is stored in a texture format and can still be displayed like any other texture). It also has a fairly large interface (which could possibly be a Fat interface), since all the interactive pieces of the shader are passed through this object (such as whether certain features are enabled and parameters like the height levels to assign different textures to).

Separating Texture Responsibilities

To separate the two responsibilities of Texture, I created another hierarchy, called TexturingAlgorithm. As the name suggests, it was inspired by the GoF Strategy design pattern as well as the State pattern. This is because although it represents a particular way of texturing, it also defines the OpenGL state for that method. The state is a combination of which Textures are assigned and whether shaders are enabled. useCoordsForPoint() is the main operation it is responsible for, where texture coordinates are generated for the particular vertex. Although not visible in the diagram, a number of TexturingAlgorithms are contained within the terrain rendering code. These algorithms can be switched at runtime (however, they need to be told this explicitly using the use() and clear() methods to set up and clean up respectively, since it would be far too costly to do this for every vertex of every frame). In doing this I have essentially created classes defined by their behaviour, which is basically the same as using a verb for the class name (I suppose Texture would be the name if used as a verb, which would be confusing).

One interesting thing to note is that we now have a distinct difference between a ProceduralTexture, which uses Images to create a Texture at load time, and a ShaderTexturingAlgorithm, which uses Textures and combines them in a similar way at runtime. The main differences are that ShaderTexturingAlgorithm can change parameters of this combination in real time, but requires fairly modern graphics hardware to do so.

Here is a description of each class and what it is responsible for:

- Texture - Represents an OpenGL texture. Holds a reference to it, knows how to bind and unbind itself to/from OpenGL. Also knows the length and width of the rendered terrain, which is necessary for some of the subclasses.

- ImageFileTexture - Loads an image file into an OpenGL texture.

- ProceduralTexture - Loads multiple image files and combines them into one OpenGL texture based on the particular terrain.

- TriGridTexture - Creates an OpenGL texture with particular properties from geometric equations.

- TexturingAlgorithm - Controls the current texture bindings and how texture coordinates are generated.

- BasicTexturingAlgorithm - Applies a texture to the terrain directly.

- MultitexturingAlgorithm - Applies a texture to the terrain directly and uses hardware multitexturing with another texture which is repeated a number of times over the terrain.

- TriGridTexturingAlgorithm - Applies an entire texture once per polygon.

- ShaderTexturingAlgorithm - Interfaces with OpenGL Shader Language (GLSL) shaders to texture and light the terrain using a number of textures.
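The split between Texture and TexturingAlgorithm listed above could look roughly like the following sketch. It is not the renderer's actual code: the log parameter, the TexCoord struct and the constructor arguments are assumptions made so that the example runs without an OpenGL context, and the terrain length and width are passed to the algorithm here purely for brevity.

```cpp
#include <string>
#include <utility>
#include <vector>

// Stand-in for the OpenGL-backed Texture. bind() and unbind() only record
// what would happen, so the sketch needs no graphics context.
class Texture {
public:
    explicit Texture(std::string name) : name_(std::move(name)) {}
    void bind(std::vector<std::string>& log) const { log.push_back("bind " + name_); }
    void unbind(std::vector<std::string>& log) const { log.push_back("unbind " + name_); }
private:
    std::string name_;
};

struct TexCoord { float u, v; };

// Strategy interface: one subclass per way of texturing the terrain.
class TexturingAlgorithm {
public:
    virtual ~TexturingAlgorithm() = default;
    virtual void use(std::vector<std::string>& log) = 0;    // set up state once per switch
    virtual void clear(std::vector<std::string>& log) = 0;  // undo that state
    // The real method presumably hands the coordinates to OpenGL;
    // here they are simply returned.
    virtual TexCoord useCoordsForPoint(float x, float z) const = 0;
};

// Stretches a single texture once over the whole terrain.
class BasicTexturingAlgorithm : public TexturingAlgorithm {
public:
    BasicTexturingAlgorithm(const Texture& texture, float length, float width)
        : texture_(texture), length_(length), width_(width) {}
    void use(std::vector<std::string>& log) override { texture_.bind(log); }
    void clear(std::vector<std::string>& log) override { texture_.unbind(log); }
    TexCoord useCoordsForPoint(float x, float z) const override {
        return {x / length_, z / width_};
    }
private:
    const Texture& texture_;
    float length_;
    float width_;
};
```

The rendering code would call use() once when switching algorithm, useCoordsForPoint() for every vertex, and clear() before switching to another algorithm.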

Extracting Texture Classes

Because ShaderTexturingAlgorithm contained a couple of Textures that it used, I thought it was a good idea to separate these. These Textures were the NormalMap and the HeightMapTexture. The NormalMap contains the normal vector directions at every point on the terrain and is used for lighting calculations in the Shader code. The HeightMapTexture (which is named so to distinguish it from the HeightMap class elsewhere in the code, which does, believe it or not, describe a different idea) is a monochrome texture which stores the height of the terrain at each point. This will be more accurate than the geometry itself which is an approximation of the heightmap.
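The text does not say how the NormalMap is filled in. One common approach, shown here purely as an assumption, is to estimate each normal from the heightmap with central differences. The function name normalAt, the row-major layout and the grid spacing of one unit are all hypothetical.

```cpp
#include <algorithm>
#include <array>
#include <cmath>
#include <cstddef>
#include <vector>

// Estimates the unit surface normal at grid cell (x, z) of a heightmap
// stored row-major with `width` samples per row and `depth` rows.
// A spacing of one unit between neighbouring samples is assumed.
std::array<float, 3> normalAt(const std::vector<float>& heights,
                              int width, int depth, int x, int z) {
    // Clamp indices so that border cells reuse their own height. This makes
    // border normals slightly less accurate than interior ones.
    auto at = [&](int i, int j) {
        i = std::max(0, std::min(width - 1, i));
        j = std::max(0, std::min(depth - 1, j));
        return heights[static_cast<std::size_t>(j) * static_cast<std::size_t>(width)
                       + static_cast<std::size_t>(i)];
    };

    // Height change across two cells in each horizontal direction.
    float dx = at(x + 1, z) - at(x - 1, z);
    float dz = at(x, z + 1) - at(x, z - 1);

    // For a surface y = h(x, z) the normal is (-dh/dx, 1, -dh/dz). Scaling by
    // the two-unit span of the central difference gives (-dx, 2, -dz).
    float nx = -dx, ny = 2.0f, nz = -dz;
    float length = std::sqrt(nx * nx + ny * ny + nz * nz);
    return {nx / length, ny / length, nz / length};
}
```

To store such a normal in a texture, each component would typically be remapped from [-1, 1] to the colour range, which is why the result can still be displayed like any other texture.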

Separating Basic Shader Responsibilities From ShaderTexturingAlgorithm

Shaders obviously embody a separate idea to the algorithm that uses them, so it makes sense to extract any shader interface code into at least one other class.

The structure and use of shaders are as follows:

There are two main types of shaders: vertex shaders and fragment shaders. These are pieces of code that are handed to OpenGL as source strings; in my case they are always loaded from files. They are individually compiled, then attached to a shader program, where they are linked. To access uniform variables contained within the shader, the location of the variables must be known. The shader program can locate a variable given its name. It can also load a value into a variable given its location. The shader program can be set as the current shader program in execution. To return to the standard fixed-function pipeline (no shaders), a null shader program needs to be used.

I decided to reflect this structure in classes as follows:

The ShaderProgram class wraps the GLSL shader program. It contains a map to store variable names and their locations. The Shader class wraps a GLSL shader. It is subclassed into VertexShader and FragmentShader. Shaders can be attached to a ShaderProgram only after being compiled (a Design by Contract precondition). The ShaderProgram can then be linked. After linking, the program can be used and cleared.

I decided to reflect the underlying model in the interface to these classes. That is, a shader needs to be explicitly compiled by the client after creation. The shaders are then attached, one by one, to the shader program. The program then needs to be explicitly linked by the client. I could have compiled the Shader automatically directly after loading it from the file (which is done in the constructor). I could also have given the ShaderProgram a list of Shaders to link automatically. The reason I kept these steps explicit is that there may be situations I am unaware of where direct control like this is necessary. Also, in the case of the shader program, there is no less complexity in creating a list to be passed to the constructor than in adding each Shader manually.
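The explicit compile, attach and link sequence, together with the "compiled before attached" precondition, might be sketched as follows. This is a stand-in that makes no OpenGL calls: the boolean flags, the isCompiled() and isLinked() accessors and the use of std::logic_error to signal a broken precondition are all assumptions, and Shader is kept concrete here only to keep the example short.

```cpp
#include <stdexcept>
#include <string>
#include <utility>
#include <vector>

// Stand-in for a GLSL shader object. The real class would create the
// OpenGL shader and upload the source read from the file.
class Shader {
public:
    explicit Shader(std::string source) : source_(std::move(source)) {}
    virtual ~Shader() = default;
    void compile() { compiled_ = true; }  // real code would call glCompileShader
    bool isCompiled() const { return compiled_; }
private:
    std::string source_;
    bool compiled_ = false;
};

// Stand-in for a GLSL program object with its preconditions made explicit.
class ShaderProgram {
public:
    // Precondition: the shader exists and has been compiled.
    void attachShader(const Shader* shader) {
        if (shader == nullptr || !shader->isCompiled()) {
            throw std::logic_error("attachShader: shader must be compiled first");
        }
        shaders_.push_back(shader);
    }
    // Precondition: at least one shader has been attached.
    void link() {
        if (shaders_.empty()) {
            throw std::logic_error("link: no shaders attached");
        }
        linked_ = true;  // real code would call glLinkProgram
    }
    bool isLinked() const { return linked_; }
private:
    std::vector<const Shader*> shaders_;
    bool linked_ = false;
};
```

Checking the preconditions at runtime like this is one option; documenting them and using assertions in debug builds would be another.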

Design by Contract pre and post conditions??

Abstracting From the OpenGL Shader Language (GLSL)

Final Design

In this update, I abstract some of the low-level aspects of shaders into their own classes. First we have the Shader class with its two subclasses, VertexShader and FragmentShader. Because C++ has no keyword for marking a class abstract (a class is abstract only if it declares at least one pure virtual function), I added a pure virtual forceAbstract() method. I'm not sure this is the best way to go about it, but I wanted to make sure that a generic Shader could not be constructed. This cropped up because there is very little difference between the two types of shader: the only difference is that when the shader is created in OpenGL, a constant has to be passed which tells OpenGL whether it is a vertex shader or a fragment shader. I still thought it was worth subclassing so that the client (ShaderTexturingAlgorithm) doesn't need to know about this constant.
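One possible alternative to a dummy forceAbstract() method, offered here only as a sketch, is to make the one thing that really differs between the subclasses the pure virtual function. The ShaderKind enum and the kind() method are hypothetical names; in the real code the subclass would presumably return GL_VERTEX_SHADER or GL_FRAGMENT_SHADER instead.

```cpp
#include <string>
#include <type_traits>
#include <utility>

// Placeholder for the OpenGL constant that distinguishes the shader types.
enum class ShaderKind { Vertex, Fragment };

class Shader {
public:
    explicit Shader(std::string filename) : filename_(std::move(filename)) {}
    virtual ~Shader() = default;
    const std::string& filename() const { return filename_; }
    // Pure virtual: this alone makes Shader abstract, and it also carries
    // the only real difference between the two subclasses.
    virtual ShaderKind kind() const = 0;
private:
    std::string filename_;
};

class VertexShader : public Shader {
public:
    using Shader::Shader;  // inherit the filename constructor
    ShaderKind kind() const override { return ShaderKind::Vertex; }
};

class FragmentShader : public Shader {
public:
    using Shader::Shader;
    ShaderKind kind() const override { return ShaderKind::Fragment; }
};

// The compiler now rejects any attempt to construct a plain Shader.
static_assert(std::is_abstract<Shader>::value, "Shader must be abstract");
```

Other common options are a protected constructor or a pure virtual destructor with an out-of-line definition; which reads best is a matter of taste.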

Secondly, we have the ShaderProgram class which links Shaders together into a program. It also keeps a map of uniform variables for that program (with the key as the name of the variable and the value as its location in the program). This has helped shrink ShaderTexturingAlgorithm a bit since it doesn't need to keep a reference to the location of these variables anymore. It still needs to know the names of these variables, but this is pretty much a necessary evil, since it has to know how to handle each variable anyway.
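The name-to-location map could work along these lines. This is a sketch under assumptions rather than the actual ShaderProgram code: the class name UniformCache and the injected lookup function are hypothetical, the latter standing in for a call such as glGetUniformLocation so that the example runs without OpenGL.

```cpp
#include <functional>
#include <map>
#include <string>
#include <utility>

// Caches uniform locations by name so the driver is only asked once per variable.
class UniformCache {
public:
    // The lookup function is expected to query the driver for a location.
    using Lookup = std::function<int(const std::string&)>;

    explicit UniformCache(Lookup lookup) : lookup_(std::move(lookup)) {}

    int location(const std::string& name) {
        auto it = locations_.find(name);
        if (it != locations_.end()) {
            return it->second;  // already known, no driver call needed
        }
        int loc = lookup_(name);
        locations_.emplace(name, loc);
        return loc;
    }

private:
    Lookup lookup_;
    std::map<std::string, int> locations_;  // key: variable name, value: location
};
```

With something like this inside ShaderProgram, the client only ever refers to uniforms by name.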

One good win is that the setup code for the shaders is a bit more readable. We go from this:

```cpp
//initialise shaders
glewInit();

vobj = glCreateShader(GL_VERTEX_SHADER);
fobj = glCreateShader(GL_FRAGMENT_SHADER);

const char * vs = readShader("mult.vert"); //Get shader source
const char * fs = readShader("mult.frag");

glShaderSource(vobj, 1, &vs, NULL); //Construct shader objects
glShaderSource(fobj, 1, &fs, NULL);

glCompileShader(vobj); //Compile shaders
glCompileShader(fobj);

pobj = glCreateProgram();
glAttachShader(pobj, vobj);
glAttachShader(pobj, fobj);
glLinkProgram(pobj);

GLsizei *length = NULL;
GLcharARB *infoLog = new GLcharARB[5000];
glGetInfoLogARB(pobj, 5000, length, infoLog);
cout << infoLog;
```

To this:

```cpp
//initialise shaders
Shader *vertexShader = new VertexShader("mult.vert");
Shader *fragmentShader = new FragmentShader("mult.frag");

vertexShader->compile();
fragmentShader->compile();

shaderProgram = new ShaderProgram();
shaderProgram->attachShader(vertexShader);
shaderProgram->attachShader(fragmentShader);
shaderProgram->link();

cout << shaderProgram->getLinkerOutput();
```

One thing I wasn't sure about was how closely I should match the underlying API. I've ended up matching it fairly closely to how I used it. So a vertex shader needs compiling after being created, and a ShaderProgram has each Shader attached manually, then finally needs to be linked. ShaderProgram could take a list of shaders or something similar instead, but I'm not sure if that would be any better.