Terrain Renderer Design


First Ideas

COSC460 - Texturing methods for terrain rendering using GPU based methods

This project probably won't be suitable, for several reasons. I did not create, and probably will have no need to modify, the majority of the code, as it covers the main terrain rendering algorithm (ROAM) etc. Most of my time is spent in the shader code itself, which exists outside of object orientation. Of course there will still be design considerations (use of functions, code repetition etc.) but it would be difficult to apply most of our OO knowledge. Shaders are also very picky in their implementations, so I have had to throw some ideas out the window (functions, arrays, dynamic branching and loops are fairly recent developments in shader land).

On the other hand, I know for a fact I have violated some decent design considerations. I know I have at least one case of inheritance for implementation. Or perhaps there's a bigger problem. One red flag may be that I have been primarily editing one class, whose primary job is to interface with the shaders.

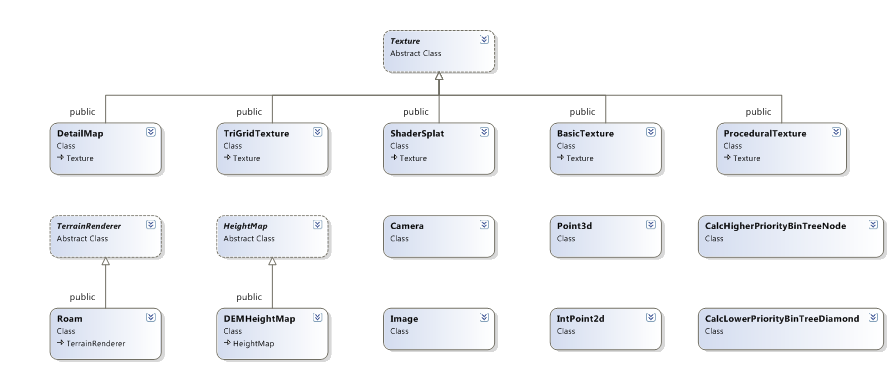

Here is a class diagram taken directly from Visual Studio (using C++). Not really UML, doesn't even show compositions.

In addition to this there is the shader code which consists of two files, a vertex shader and a fragment shader which are compiled and linked at runtime.

I only created two of these classes: ShaderSplat and BasicTexture. I have also edited DEMHeightMap a little.

One thing to note: Texture is a bit of a misleading name for the abstract class. It should probably be called something more along the lines of TexturingMethod. Basically the terrain can be rendered with different "Textures" and this can be changed at runtime. ProceduralTexture is the simplest (BasicTexture is even more misleading in that I don't think it will work the same as the others). DetailMap actually contains a ProceduralTexture and uses multitexturing to blend with a detail texture. TriGridTexture uses a method that allows a polygon to always be textured by a full copy of a texture, meaning individual polygons are clearly visible. ShaderSplat is my method that uses shaders.

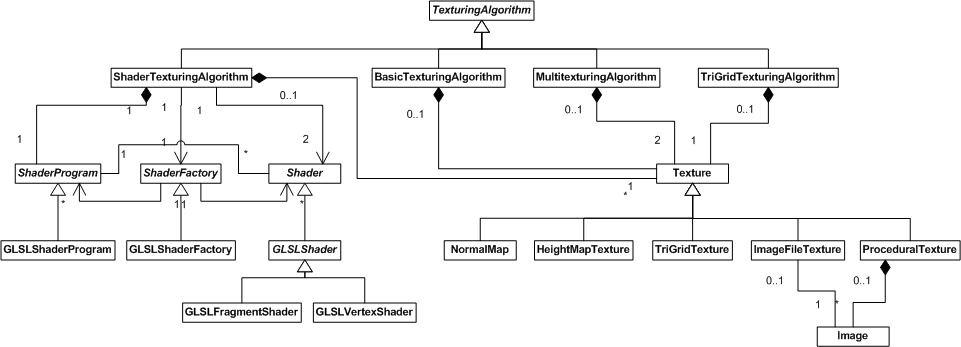

--Kris 04:24, 4 August 2008 (UTC) I'm actually warming to the idea of using this for my project. Here is a class diagram. It only covers the Texture hierarchy (plus Image since that is closely related).

Hmm...well it's shouting out Decorator/Composite to me except for one thing. DetailMap and ShaderSplat are completely at odds with each other. That is, it would be impossible for ShaderSplat to use a DetailMap for its texture and vice versa. It would also be impossible for a ShaderSplat to contain a ShaderSplat etc.

First Redesign

The biggest thing that was bugging me about the initial design was that Texture seemed to be encapsulating two separate ideas. One was that it represented an OpenGL texture loaded into video memory on the graphics card. The other was the way in which textures were applied to the terrain. A major clue that this was happening was the way in which subclasses handled the textureID attribute. DetailMap was using it as well as keeping another texture and ShaderSplat never even used it!

So for my first redesign, I separated Texture into two hierarchies. One, still called Texture, encapsulates the idea of a texture in OpenGL. Its subclasses cover textures that are loaded from files (ImageFileTexture), textures that are created as combinations of other textures (ProceduralTexture) and textures that are created mathematically (TriGridTexture).

The other hierarchy I called TexturingAlgorithm. It is similar to a GoF Strategy design pattern. It covers how the terrain is textured and usually a TexturingAlgorithm will contain a number of Textures to do its job. A BasicTexturingAlgorithm contains one texture and simply applies it one-to-one to the terrain. A MultiTexturingAlgorithm has 2 textures. The textures are blended together (using hardware multitexturing) and applied to the terrain. A TriGridTexturingAlgorithm has one texture and applies it once for every polygon on the terrain (together with TriGridTexture it is a way of showing individual polygons clearly). A ShaderTexturingAlgorithm currently uses 5 explicit Textures and uses GPU shaders to apply these textures in various ways at runtime.
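A minimal sketch of how this split might look as a Strategy, assuming nothing about the real code beyond the class names above. The `Terrain` context class, the `describe()` method and the string output are hypothetical stand-ins for the OpenGL calls a real implementation would make.

```cpp
#include <memory>
#include <string>
#include <utility>

// Hypothetical stand-in for an OpenGL texture; a real one would own a texture ID.
class Texture {
public:
    explicit Texture(std::string name) : name_(std::move(name)) {}
    virtual ~Texture() = default;
    const std::string& name() const { return name_; }
private:
    std::string name_;
};

// Strategy interface: how the terrain gets textured.
class TexturingAlgorithm {
public:
    virtual ~TexturingAlgorithm() = default;
    // Reports what would be bound before drawing; real code would issue GL calls here.
    virtual std::string describe() const = 0;
};

// One texture applied one-to-one to the terrain.
class BasicTexturingAlgorithm : public TexturingAlgorithm {
public:
    explicit BasicTexturingAlgorithm(std::shared_ptr<Texture> t) : texture_(std::move(t)) {}
    std::string describe() const override { return "basic(" + texture_->name() + ")"; }
private:
    std::shared_ptr<Texture> texture_;
};

// Two textures blended together.
class MultiTexturingAlgorithm : public TexturingAlgorithm {
public:
    MultiTexturingAlgorithm(std::shared_ptr<Texture> base, std::shared_ptr<Texture> detail)
        : base_(std::move(base)), detail_(std::move(detail)) {}
    std::string describe() const override {
        return "multi(" + base_->name() + "+" + detail_->name() + ")";
    }
private:
    std::shared_ptr<Texture> base_;
    std::shared_ptr<Texture> detail_;
};

// Context (hypothetical): holds the current strategy and can swap it at runtime.
class Terrain {
public:
    void setTexturing(std::unique_ptr<TexturingAlgorithm> a) { algorithm_ = std::move(a); }
    std::string render() const { return algorithm_ ? algorithm_->describe() : "untextured"; }
private:
    std::unique_ptr<TexturingAlgorithm> algorithm_;
};
```

Because the algorithms only hold Textures rather than being Textures, the same Texture object can be shared between several algorithms, and swapping the algorithm at runtime does not require reloading anything.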

One interesting thing to note is that we now have a distinct difference between a ProceduralTexture, which uses Images to create a Texture at load-time and a ShaderTexturingAlgorithm which uses Textures and combines them in a similar way in real-time.

Current Problems

It is not obvious from the class diagram (though a careful look at the methods hints at it), but ShaderTexturingAlgorithm currently contains more than the 5 explicit Textures. It creates a normal map (a kind of texture that stores surface normals for lighting) and passes that through to the GPU. I should really split this into a separate subclass of Texture. It also creates something called a distance map, which is experimental and doesn't work correctly. I may end up deleting it, so I won't worry about that for now.

ShaderTexturingAlgorithm of course exhibits the Large Class smell, and there are some things I can do to help remedy this. One is to separate some aspects of shaders into their own classes. I still have to think about this some more, but I could have a Shader class. Or even better, reflect the OpenGL internals by having a ShaderProgram class which contains a VertexShader and a FragmentShader. One thing that makes ShaderTexturingAlgorithm look larger than it really is, is that it has to keep a reference to a number of variables in the shader code (called uniform variables). I could create a UniformVariable class and keep a collection of these in the ShaderProgram class. Each UniformVariable would have a name (as it appears in the shader code) and a type (ah, but this might lead to type switches... I'll need to think about that one). A ShaderProgram could then be told to load a value into one of its UniformVariables, and the correct OpenGL function would need to be called based on that variable's type. Or I could pass that responsibility off by having multiple methods for different types which correspond directly to the OpenGL functions.
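The second option (one method per type) can be sketched with overloading, so the compiler picks the OpenGL call and no type tag or switch is needed. This is only an illustration: `UniformRecorder` is a hypothetical class that records which `glUniform*` function would be called instead of calling it, and the uniform names in the usage note are made up.

```cpp
#include <string>
#include <vector>

// Hypothetical sketch: each overload corresponds to one glUniform* function.
// Instead of calling OpenGL, it records the call so the idea can be tried without a GL context.
class UniformRecorder {
public:
    void setUniform(const std::string& name, float /*value*/) {
        calls_.push_back("glUniform1f " + name);   // real code: glUniform1f(location, value)
    }
    void setUniform(const std::string& name, int /*value*/) {
        calls_.push_back("glUniform1i " + name);   // real code: glUniform1i(location, value)
    }
    void setUniform(const std::string& name, float /*x*/, float /*y*/, float /*z*/) {
        calls_.push_back("glUniform3f " + name);   // real code: glUniform3f(location, x, y, z)
    }
    const std::vector<std::string>& calls() const { return calls_; }
private:
    std::vector<std::string> calls_;
};
```

One catch with this approach: a call such as `setUniform("fogDensity", 0.5)` passes a `double`, which converts equally well to `float` or `int`, so the compiler rejects it as ambiguous. Callers have to write `0.5f`.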

Second Redesign

--Kris 05:10, 22 September 2008 (UTC)

Actually, this is a pretty minor update. I've just pulled the NormalMap out of ShaderTexturingAlgorithm. I also pulled out HeightMapTexture, which I had actually lumped in with the normal map (using the alpha channel). In doing this update I ended up with some nasty bugs which took me a while to fix. So ShaderTexturingAlgorithm is still as large as anything. Hopefully my next redesign will be major enough to help with that.

Third Redesign

--Kris 01:35, 23 September 2008 (UTC)

In this update, I abstract some of the low-level aspects of shaders into their own classes. First we have the Shader class with its two subclasses, VertexShader and FragmentShader. Unfortunately, the only way to make a class abstract in C++ is to give it a pure virtual method, so I had to add the pure virtual forceAbstract() method. Not sure if this is the best way to go about it, but I wanted to make sure that a generic Shader could not be constructed. This cropped up because there is very little difference between the two types of shader. The only difference is that when the shader is constructed in OpenGL, a constant has to be passed which tells OpenGL whether it is a vertex shader or a fragment shader. I thought it was worth subclassing like this so that the client (ShaderTexturingAlgorithm) doesn't need to know about this constant.
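One possible alternative to a dummy forceAbstract() method, sketched here under assumptions: let the one real difference between the subclasses be the pure virtual method. The method name `glType()` and the two constants are hypothetical. The constants repeat the values of `GL_VERTEX_SHADER` and `GL_FRAGMENT_SHADER` so the sketch builds without the OpenGL headers.

```cpp
#include <string>
#include <type_traits>

// Same values as GL_VERTEX_SHADER / GL_FRAGMENT_SHADER, redefined so no GL header is needed.
const unsigned int kVertexShader = 0x8B31;
const unsigned int kFragmentShader = 0x8B30;

class Shader {
public:
    virtual ~Shader() {}
    const std::string& file() const { return file_; }
    // Pure virtual: makes Shader abstract and supplies the constant for glCreateShader.
    virtual unsigned int glType() const = 0;
protected:
    // Protected constructor: only subclasses can construct a Shader.
    explicit Shader(const std::string& file) : file_(file) {}
private:
    std::string file_;
};

class VertexShader : public Shader {
public:
    explicit VertexShader(const std::string& file) : Shader(file) {}
    unsigned int glType() const override { return kVertexShader; }
};

class FragmentShader : public Shader {
public:
    explicit FragmentShader(const std::string& file) : Shader(file) {}
    unsigned int glType() const override { return kFragmentShader; }
};

// Compile-time check that a generic Shader cannot be instantiated.
static_assert(std::is_abstract<Shader>::value, "Shader should be abstract");
```

A caveat with this variant: virtual calls made from the Shader constructor resolve to Shader itself, so `glType()` cannot be used there. The OpenGL object would have to be created later (for example in compile()), or the subclass would have to pass the constant up to the base constructor instead.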

Secondly, we have the ShaderProgram class which links Shaders together into a program. It also keeps a map of uniform variables for that program (with the key as the name of the variable and the value as its location in the program). This has helped shrink ShaderTexturingAlgorithm a bit since it doesn't need to keep a reference to the location of these variables anymore. It still needs to know the names of these variables, but this is pretty much a necessary evil, since it has to know how to handle each variable anyway.
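The name-to-location map could look roughly like the sketch below. `UniformLocationCache` is a hypothetical class, and `queryDriver()` is a stand-in for `glGetUniformLocation(program, name)` that simply hands out increasing numbers, so the caching behaviour can be seen without a GL context.

```cpp
#include <map>
#include <string>

// Hypothetical sketch of the map a ShaderProgram might keep: uniform name -> location.
class UniformLocationCache {
public:
    // Returns the cached location, asking the driver only the first time a name is seen.
    int location(const std::string& name) {
        auto it = locations_.find(name);
        if (it != locations_.end()) {
            return it->second;
        }
        int loc = queryDriver(name);
        locations_[name] = loc;
        return loc;
    }
    // Number of lookups that actually reached the (fake) driver.
    int driverQueries() const { return queries_; }
private:
    // Stand-in for glGetUniformLocation(program, name.c_str()); returns 0, 1, 2, ...
    int queryDriver(const std::string& /*name*/) { return queries_++; }

    std::map<std::string, int> locations_;
    int queries_ = 0;
};
```

With something like this, the client only ever passes names, and repeated sets of the same uniform do not repeat the lookup.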

One good win is that the setup code for the shaders is a bit more readable. We go from this:

```cpp
// initialise shaders
glewInit();
vobj = glCreateShader(GL_VERTEX_SHADER);
fobj = glCreateShader(GL_FRAGMENT_SHADER);
const char * vs = readShader("mult.vert"); // Get shader source
const char * fs = readShader("mult.frag");
glShaderSource(vobj, 1, &vs, NULL); // Construct shader objects
glShaderSource(fobj, 1, &fs, NULL);
glCompileShader(vobj); // Compile shaders
glCompileShader(fobj);
pobj = glCreateProgram();
glAttachShader(pobj, vobj);
glAttachShader(pobj, fobj);
glLinkProgram(pobj);
GLsizei *length = NULL;
GLcharARB *infoLog = new GLcharARB[5000];
glGetInfoLogARB(pobj, 5000, length, infoLog);
cout << infoLog;
```

To this:

```cpp
// initialise shaders
Shader *vertexShader = new VertexShader("mult.vert");
Shader *fragmentShader = new FragmentShader("mult.frag");
vertexShader->compile();
fragmentShader->compile();
shaderProgram = new ShaderProgram();
shaderProgram->attachShader(vertexShader);
shaderProgram->attachShader(fragmentShader);
shaderProgram->link();
cout << shaderProgram->getLinkerOutput();
```

One thing I wasn't sure about was how closely I should match the underlying API. I've ended up matching it fairly closely to how I used it. So a vertex shader needs compiling after being created, and a shaderProgram has each Shader attached manually, then finally needs to be linked. This could take a list of shaders or something instead, but I'm not sure if that would be any better.
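For comparison, the list-based variant could be layered on top of the step-by-step API rather than replacing it. The sketch below uses hypothetical stub classes (`StubShader`, `StubProgram`) and a hypothetical helper `buildProgram()`. The stubs only set flags where the real classes would call `glCompileShader`, `glAttachShader` and `glLinkProgram`.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical stand-in for Shader; the real class would wrap an OpenGL shader object.
struct StubShader {
    std::string file;
    bool compiled;
    explicit StubShader(const std::string& f) : file(f), compiled(false) {}
    void compile() { compiled = true; }   // real code: glCompileShader
};

// Hypothetical stand-in for ShaderProgram with the same step-by-step interface.
class StubProgram {
public:
    StubProgram() : linked_(false) {}
    void attachShader(StubShader* s) { shaders_.push_back(s); }   // real code: glAttachShader
    void link() { linked_ = true; }                               // real code: glLinkProgram
    bool linked() const { return linked_; }
    std::size_t shaderCount() const { return shaders_.size(); }
private:
    std::vector<StubShader*> shaders_;
    bool linked_;
};

// Convenience helper: compile and attach every shader in the list, then link.
inline void buildProgram(StubProgram& program, const std::vector<StubShader*>& shaders) {
    for (std::size_t i = 0; i < shaders.size(); ++i) {
        shaders[i]->compile();
        program.attachShader(shaders[i]);
    }
    program.link();
}
```

This keeps the API that mirrors OpenGL available for unusual cases, while the common compile, attach, link sequence becomes a single call. Whether that is actually better is still a judgment call.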

As suggested by David here's a simpler version of the class diagram: