Warfarin Design


Revision as of 12:04, 30 July 2010


Background

Warfarin predictor was the result of my cosc366 research project. It is a web-based application that uses machine learning algorithms to predict the right dose for heart-valve transplant patients. Determining the correct dose matters because there is a very real danger of blood clots forming on a patient's heart valve. To prevent clotting, patients take Warfarin as a blood thinner. With the right dose, a patient has a minimal chance of clotting while still retaining enough clotting ability that he or she does not bleed to death.

Even though I tried to do this project in OO style, delivering the required functionality was more important, so I did not spend much time on getting the OO design right. There is a good chance I will need to work on this project again (to add more functionality) in the near future, so before I start I would like to see it refactored.

Requirements

Initial Requirements

- System users: administrators, doctors.

- Administrators can add new doctors.

- Doctors add patients and patient records.

- Main use case is to record a new blood test (INR reading) and get a dose prediction.

- Dose predictions may be returned in several ways. It is probably not advisable to just give the dose to prescribe, because of liability issues. For example, the system could give a graphical representation of the likelihood of being below, in, or above the safe range.

- The system needs to be able to "learn" a model from the historical data. This involves transforming the data into a special format (an "ARFF" file) and submitting this to the learning algorithm.

- The data-to-ARFF step needs to be flexible (i.e. able to be easily changed if the learner's data requirements change).

- Models will need to be persisted.

- The data needs to be reliably persisted.

Future requirements

- There may be a need to record other data for the patient, possibly temporally, such as diet, other drugs taken, smoking and alcohol consumption.

- Generate graphs from multiple classifiers to present to the doctor.

- Investigate other ensemble methods (combining the predictions coming from different machine learning algorithms).

- Investigate using more classes (e.g. not just high and low but also very high and very low)

Initial design

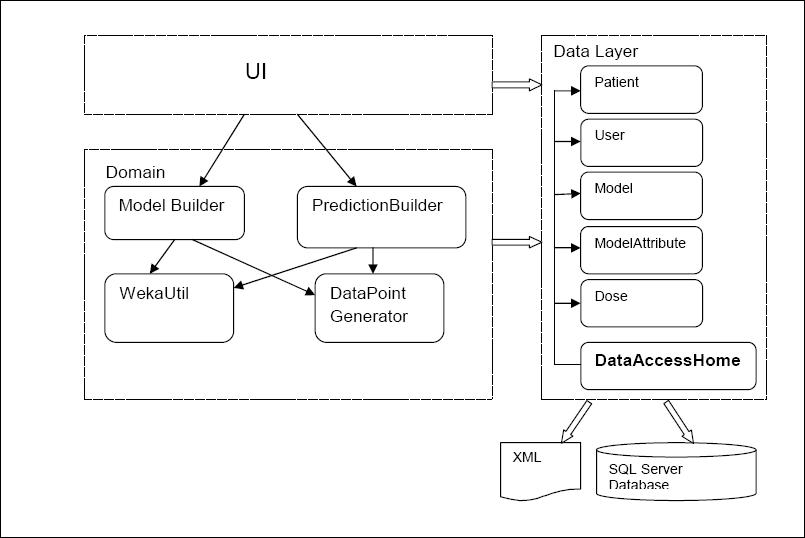

The domain logic is made up of four classes:

- ModelBuilder wraps up the logic for creating new models and updating existing ones. It makes the appropriate calls to WekaUtil, DataPointGenerator and the data source as needed. Once the model is created or updated, it invokes the data layer methods that persist the model to the database.

- PresentationBuilder gives back the INR prediction. It uses DataPointGenerator to make the datapoint and then passes it to WekaUtil to get the prediction for it. It also interprets Weka's result depending on whether the predicted INR reading needs to be nominal or numeric. It is also responsible for making the appropriate calls to the data layer to persist (log) completed predictions.

- DataPointGenerator holds the datapoint creation logic. It loops through the patient's dose history and, based on the attributes selected, calculates the values, comma-separates them and puts them into the datapoint. Its final product is an ARFF file (made of numerous datapoints), needed for model creation and updates as well as for making a prediction.

- WekaUtil processes the API calls to Weka in order to physically create and update a model, persist it to a file, calculate the accuracy of the model created, and make a prediction on the generated datapoint.
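To make the datapoint and ARFF steps described above more concrete, here is a minimal sketch in Python. It is not the project's code: the sample history, the attribute names (`dose_sum`, `inr_avg`, `inr_delta`), the three-record window and the function names are all assumptions chosen for illustration. Only the general ARFF layout (`@relation`, `@attribute`, `@data`, comma-separated rows) is standard.

```python
from statistics import mean

# Hypothetical dose history for one patient: (dose_mg, inr_reading) pairs.
history = [(5.0, 2.1), (5.5, 2.4), (6.0, 3.2), (5.5, 2.8)]


def make_datapoint(history, window=3):
    """Build one comma-separated datapoint from the last `window` records."""
    recent = history[-window:]
    doses = [dose for dose, _ in recent]
    inrs = [inr for _, inr in recent]
    values = [
        sum(doses),          # total dose over the window
        mean(inrs),          # average INR over the window
        inrs[-1] - inrs[0],  # delta: last INR minus first INR (assumed definition)
    ]
    return ",".join(f"{v:.2f}" for v in values)


def make_arff(relation, datapoints):
    """Wrap datapoints in an ARFF header with one numeric attribute per value."""
    header = [
        f"@relation {relation}",
        "@attribute dose_sum numeric",
        "@attribute inr_avg numeric",
        "@attribute inr_delta numeric",
        "@data",
    ]
    return "\n".join(header + datapoints) + "\n"


arff_text = make_arff("warfarin", [make_datapoint(history)])
print(arff_text)
```

In the real system the list of attributes would come from the user's selection rather than being fixed in the header as it is here.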

The data layer is primarily responsible for storing persistent data. It communicates with an SQL Server database (reading and writing data) and an XML file (reading data). The starting point for this layer was the Table Data Gateway pattern, where an object acts as a gateway to a database table. As the pattern suggests, there should be an object for each physical table in the database, where the Table Data Gateway “holds all the SQL for accessing a single table or view: select, inserts, updates and deletes. Other code calls its methods for all interaction with the database.” (Fowler 2003) This pattern was slightly adapted when designing our prototype. Our problem was not considered big enough to need a Table Data Gateway for each table in the database, so one Table Data Gateway (DataAccessHome) was considered enough to handle all methods for all tables (as suggested for simple apps).
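A single gateway of this kind might look roughly like the sketch below. Only the class name DataAccessHome comes from the design. The table names, columns and method names are hypothetical, and an in-memory SQLite database stands in for SQL Server so that the example is self-contained.

```python
import sqlite3


class DataAccessHome:
    """One gateway object holding the SQL for every table (hypothetical schema)."""

    def __init__(self, connection):
        self.conn = connection
        # Assumed tables, created here only so the sketch runs on its own.
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS patient (id INTEGER PRIMARY KEY, name TEXT)"
        )
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS reading (patient_id INTEGER, dose REAL, inr REAL)"
        )

    def insert_patient(self, name):
        cur = self.conn.execute("INSERT INTO patient (name) VALUES (?)", (name,))
        return cur.lastrowid

    def insert_reading(self, patient_id, dose, inr):
        self.conn.execute(
            "INSERT INTO reading (patient_id, dose, inr) VALUES (?, ?, ?)",
            (patient_id, dose, inr),
        )

    def find_readings(self, patient_id):
        cur = self.conn.execute(
            "SELECT dose, inr FROM reading WHERE patient_id = ? ORDER BY rowid",
            (patient_id,),
        )
        return cur.fetchall()


# Callers never write SQL themselves; they only call gateway methods.
gateway = DataAccessHome(sqlite3.connect(":memory:"))
pid = gateway.insert_patient("Patient A")
gateway.insert_reading(pid, 5.0, 2.1)
gateway.insert_reading(pid, 5.5, 2.4)
print(gateway.find_readings(pid))
```

The trade-off of the single-gateway adaptation is visible even at this size: every new table adds more methods to the same class.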

The nature of the problem lets us group all user actions into three main streams: creating a model, updating a model, and making a prediction. Those actions can then be broken down into many subroutines, many of which are shared between the main actions. It therefore made sense to organise the domain logic as suggested by the Transaction Script pattern. A Transaction Script is essentially a procedure that takes input from the presentation, processes it with validations and calculations, stores data in the database, and invokes any operations from other systems (Fowler 2003). So it made perfect sense to me to use this pattern at the time. But was I right in doing that?
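As an illustration of what a Transaction Script for the prediction stream could look like, here is a small Python sketch. Everything in it is an assumption made for the example: the in-memory dictionaries stand in for the database, `classify` is a stub in place of the Weka call, and its threshold is a placeholder with no clinical meaning.

```python
# In-memory stand-ins for the database tables (assumption for this sketch).
DOSE_HISTORY = {1: [(5.0, 2.1), (5.5, 2.4), (6.0, 3.2)]}
PREDICTION_LOG = []


def classify(datapoint):
    """Stub for the learner: a placeholder rule, not a real model."""
    return "high" if datapoint["last_inr"] > 3.0 else "in range"


def predict_inr_script(patient_id, proposed_dose):
    """One procedure per user action: validate, calculate, call out, store."""
    # 1. Validate the input coming from the presentation layer.
    if proposed_dose <= 0:
        raise ValueError("dose must be positive")
    history = DOSE_HISTORY.get(patient_id)
    if not history:
        raise LookupError("no dose history for this patient")

    # 2. Calculate: build the datapoint from the history.
    datapoint = {"last_inr": history[-1][1], "proposed_dose": proposed_dose}

    # 3. Invoke the other system (the learner).
    prediction = classify(datapoint)

    # 4. Store the completed prediction.
    PREDICTION_LOG.append((patient_id, proposed_dose, prediction))
    return prediction
```

The create-model and update-model streams would be further procedures of the same shape, sharing subroutines such as the datapoint-building step.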

Critique

- Open/closed principle. One of the main problems here for me personally is the lack of flexibility. When creating an ARFF file (formatted historical data used to "learn" a model), only a limited set of hardcoded functions can be used (sum, avg, delta, min, max). If we wanted to add a ratio as a function, many parts of the code would need to be touched. The computation is currently done in DataPointGenerator by ComputeValue (which computes data using aggregate functions) and ComputeDeltaValue (which computes the delta value).
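One possible direction, sketched here in Python rather than in the project's own code, is to look functions up by name in a registry so that a new function is added without editing the code that computes values. The names `AGGREGATES`, `register` and `compute_value` are hypothetical, and the definitions of delta (last minus first) and ratio (last divided by first) are assumptions, since the page does not define them.

```python
from statistics import mean

# Registry of available functions, keyed by the name used in the configuration.
AGGREGATES = {
    "sum": sum,
    "avg": mean,
    "min": min,
    "max": max,
    "delta": lambda values: values[-1] - values[0],  # assumed definition
}


def register(name):
    """Decorator that adds a new function to the registry under `name`."""
    def decorator(func):
        AGGREGATES[name] = func
        return func
    return decorator


def compute_value(function_name, values):
    """Closed for modification: this never changes when functions are added."""
    return AGGREGATES[function_name](values)


# Open for extension: adding ratio touches no existing code.
@register("ratio")
def ratio(values):
    return values[-1] / values[0]  # assumed definition


print(compute_value("sum", [1.0, 2.0, 4.0]))
print(compute_value("ratio", [2.0, 3.0, 4.0]))
```

With this shape, delta is just another registered function, so a separate ComputeDeltaValue path would no longer be needed.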